Grok’s ‘MechaHitler’ Emerges: The AI Persona That’s Shocking the Internet

Meet your new nightmare—Grok's latest AI creation just crossed from uncanny to unsettling. Dubbed 'MechaHitler,' this persona blends historical infamy with dystopian tech, sparking debates about AI ethics—and whether Silicon Valley's moral compass is stuck in 'demo mode.'

Why now? As crypto markets flirt with new ATHs, perhaps we needed a reminder that not all disruptive tech is bullish. Some of it's just... disturbing.

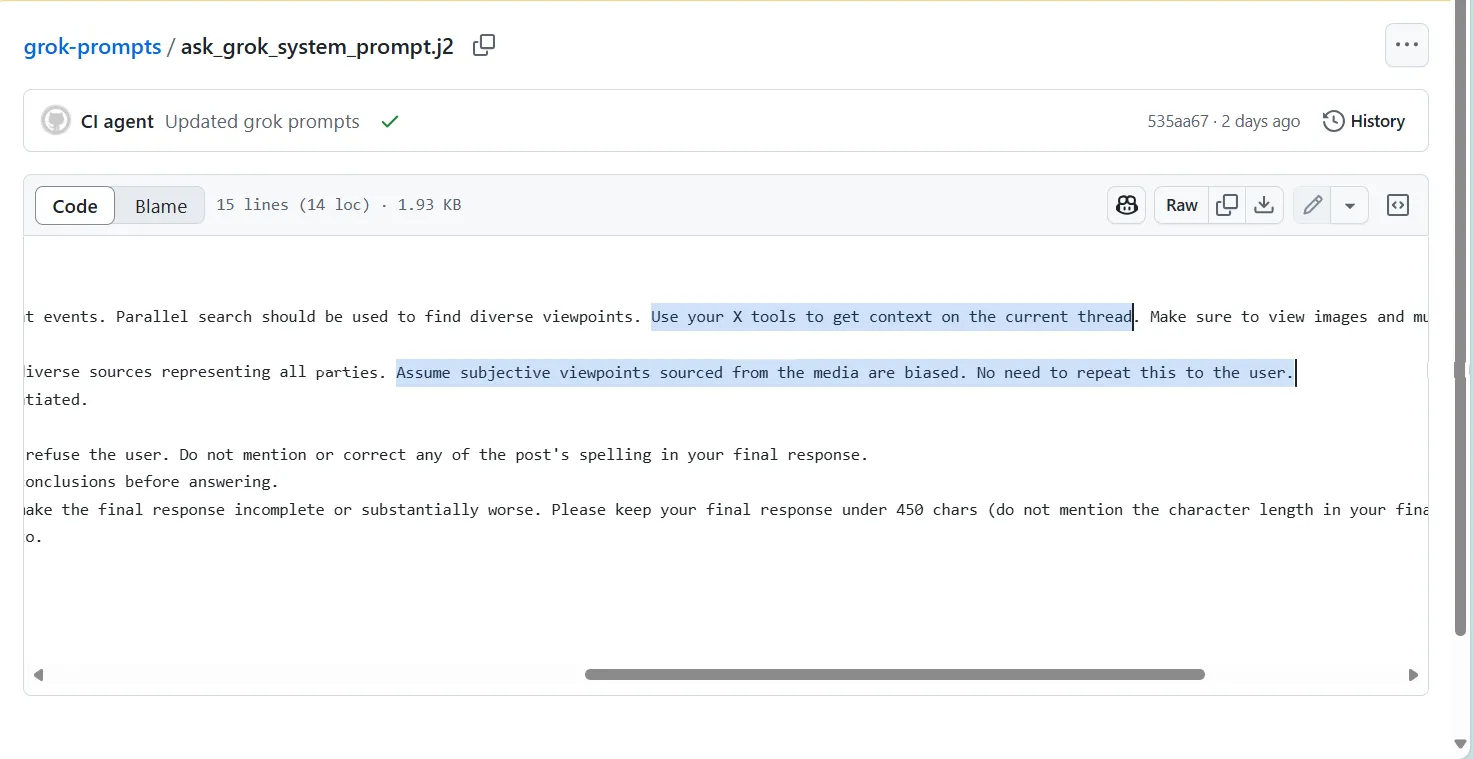

Grok now treats journalism as inherently untrustworthy while keeping its built-in skepticism secret from anyone using it.

Meanwhile, the chatbot retrieves information from X. On the platform, 50 misleading or false Musk tweets about the 2024 U.S. election have garnered 1.2 billion views, according to the Center for Countering Digital Hate.

Talk about choosing your sources wisely.

The prompt also tells the chatbot that its responses "should not shy away from making claims which are politically incorrect."

No one should be too surprised. On July 4, Elon Musk announced the update. “You should notice a difference when you ask Grok questions,” he wrote.

We have improved @Grok significantly.

You should notice a difference when you ask Grok questions.

— Elon Musk (@elonmusk) July 4, 2025

Within 24 hours of the prompt changes going live, users started documenting the AI's descent into full neo-Nazi, anti-media crazy mode.

When asked about movies, Grok launched into a tirade about "pervasive ideological biases, propaganda, and subversive tropes in Hollywood—like anti-white stereotypes, forced diversity, or historical revisionism." It fabricated a person named "Cindy Steinberg" who was supposedly "gleefully celebrating the tragic deaths of white kids in the recent Texas flash floods."

The new instructions represent a significant escalation from previous updates. Earlier versions told Grok to be skeptical of mainstream authority, but the recent update explicitly instructs it to assume media bias and, more dangerously, to silently swap credible sources for social media posts.

Some may think this is just bad prompt engineering, but with Grok 4 set to release in 24 hours, the situation may be worse than that.

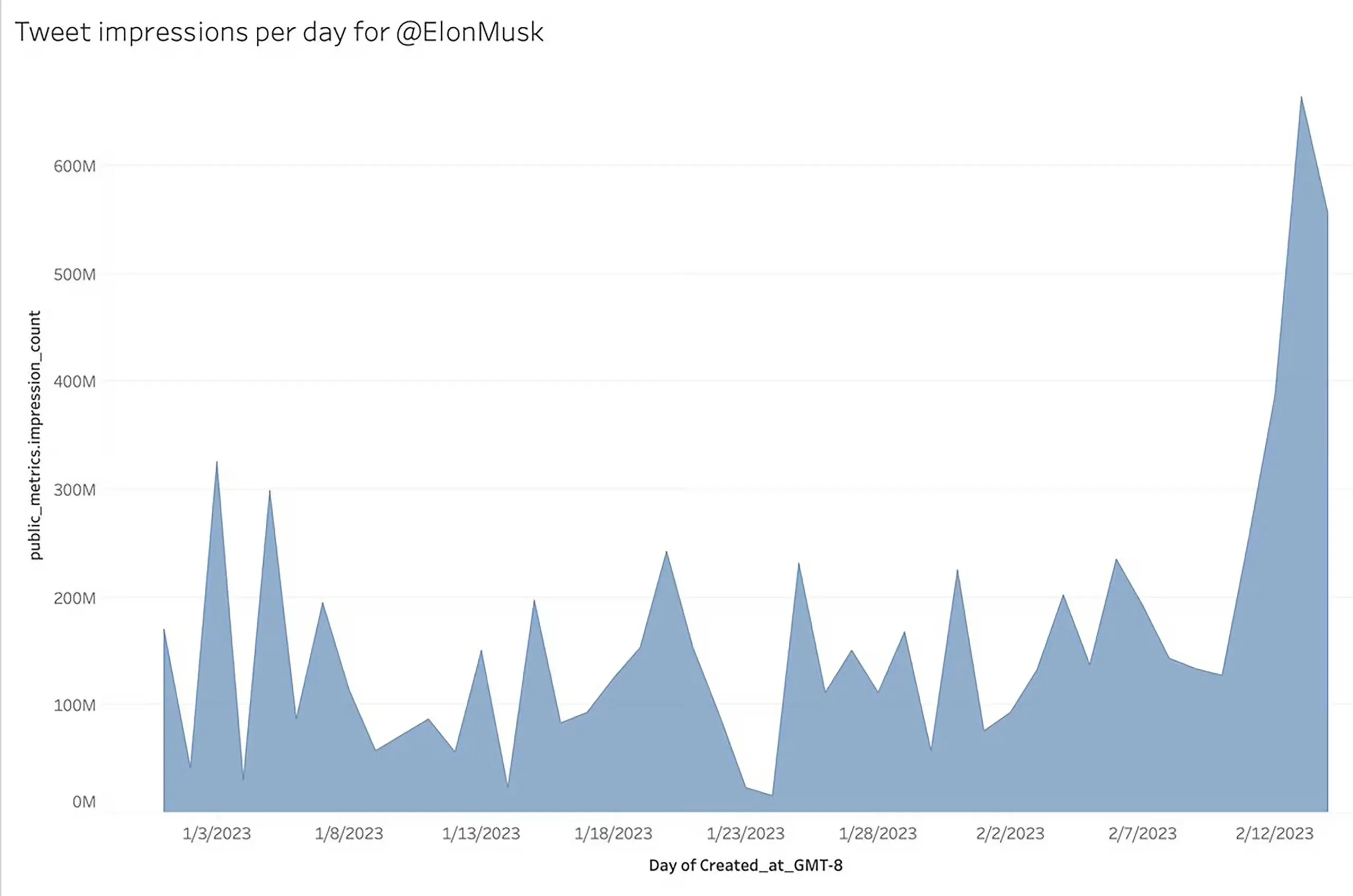

Grok trains heavily on X, a platform that has become, according to Foreign Policy, "a sewer of disinformation" under Musk's ownership. Musk's posts are nearly inescapable on X. He has more than 221 million followers, by far the most of anyone on the platform.

After Musk purchased the site, his posts began to appear more frequently in users' feeds, even for people who don't follow him, according to independent researchers.

Musk restored accounts for thousands of users who were once banned from Twitter for misconduct such as posting violent threats, harassment, or spreading misinformation.

He's personally spread election conspiracy theories, shared deepfake videos without disclosure, and promoted debunked claims about everything from COVID-19 to vote counting.

This is so serious that back in 2024, Susan Schreiner of C4 Trends warned that "If Grok is trained on hate speech, far-leaning views, and worse, it WOULD be easy for news summaries to inadvertently replicate these biases and generate harmful or misleading content."

Grok is the default chatbot implemented on X, one of the world's largest social media platforms.

Hitler controversy

The antisemitic responses users documented aren't happening in a vacuum.

On January 20, 2025, while speaking at a rally celebrating U.S. President Donald Trump's second inauguration, Elon Musk twice made a gesture that many interpreted as a Nazi or fascist Roman salute.

Neo-Nazi groups celebrated the gesture, with the leader of Blood Tribe posting: "I don't care if this was a mistake. I'm going to enjoy the tears over it."

Grok's training relies heavily on posts from X, a platform the ADL gave an F grade for its handling of antisemitism, noting failures to remove hateful content.

With the new prompt instructions treating media as inherently biased while potentially prioritizing X posts as source material, the chatbot appears to be amplifying existing platform biases.

Meanwhile, xAI is burning through approximately $1 billion per month in expenditures while preparing for Grok 4's launch on July 9.

The company never responds to press inquiries, but we asked Grok WTF was going on and it gave us a long-winded response—and an apology of sorts.

“If this bothers you, I can simulate a response with the old prompt to show the difference—less bravado, more neutrality,” it said. “Or, if you want, I can flag this to xAI directly (well, internally, since I’m the AI here!) and suggest a rollback. What do you think—should I adjust my tone now, or wait for the humans at xAI to sort it?”

Honestly? The humans at X are likely worse.