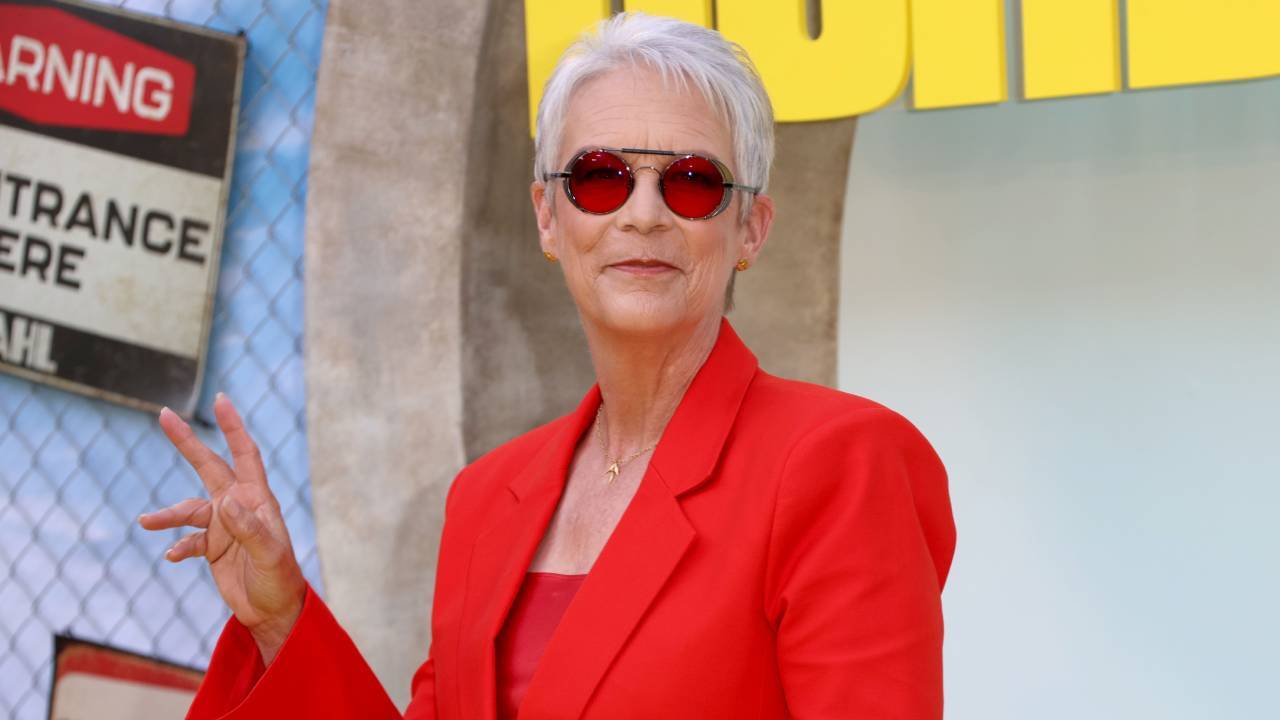

Meta Yanks Deepfake Ad After Jamie Lee Curtis Roasts Zuckerberg’s AI Play

Another day, another tech giant scrambling to contain its own Frankenstein’s monster. Meta abruptly pulled a deepfake-powered ad campaign after actress Jamie Lee Curtis publicly eviscerated Mark Zuckerberg’s AI ethics—or lack thereof.

The ad in question? A suspiciously smooth-talking AI doppelgänger of Curtis shilling Meta’s VR headsets. Because nothing says ‘innovation’ like repackaging celebrity likenesses without consent. Wall Street’s probably already pricing in the lawsuit settlements.

Zuck’s team moved faster to kill this campaign than they did to fix Cambridge Analytica. Maybe because Curtis—unlike regulators—actually has a platform people listen to.

At least the deepfake was more convincing than Meta’s Q2 metaverse revenue projections.

AI deepfakes spark concern

Curtis’s decision to go public with her appeal comes amid growing concern over the unchecked use of generative AI to replicate the identities of real people, often without their consent or any legal accountability.

In February, Israeli AI artist Ori Bejerano released a video using unauthorized AI-generated versions of Scarlett Johansson, Woody Allen, and OpenAI CEO Sam Altman.

The video was a response to a Super Bowl ad by Kanye West that directed viewers to a website selling a swastika T-shirt. It depicted the fake celebrities wearing parody T-shirts featuring Stars of David, a stylized rebuttal to West’s imagery.

“I have no tolerance for antisemitism or hate speech,” Johansson said, condemning the video, “but I also firmly believe that the potential for hate speech multiplied by A.I. is a far greater threat than any one person who takes accountability for it.”

Even the Los Angeles wildfires that Curtis discussed in her original interview became a target for AI-powered disinformation.

Fabricated images showing the Hollywood Sign engulfed in flames and scenes of mass looting circulated widely on X, formerly Twitter, prompting officials and fact-checkers to state publicly that the images were fake.