GPT-5 Countdown Begins: Breaking Down the Hype, Hard Limits, and What Comes Next

OpenAI’s next-gen model is almost here—but don’t expect miracles.

### The Good, the Bad, and the ‘Artificial’

Early benchmarks show GPT-5 crushing coding tasks and acing law exams. Yet it still hallucinates facts like a crypto influencer pumping a memecoin.

### Wall Street’s Already Overhyping It

VCs are throwing money at ‘AI-powered’ startups like it’s 2021 NFT season. Most will burn out faster than a Solana validator during congestion.

### What’s Actually New?

Faster reasoning? Yes. Human-level common sense? Not even close. The real win: it finally stops writing Python like a finance bro trying to code after three Red Bulls.

### The Bottom Line

GPT-5 isn’t Skynet—it’s just a sharper tool. And like all tools, it’ll be wielded for genius and grift in equal measure.

In the months leading up to the expected debut of GPT-5 this week, anticipation in both the technology sector and the broader business community has risen noticeably. OpenAI Chief Executive Sam Altman has continued to frame artificial-general-intelligence research as a civilisation-scale endeavour, most recently describing the current era as a “gentle singularity.” Such language naturally fuels speculation that GPT-5 will represent a step change rather than an incremental update. Yet the concrete examples Altman has shared (drafting a complex email, or curating a thoughtful list of AI-themed television programmes) suggest a narrower, more evolutionary advance.

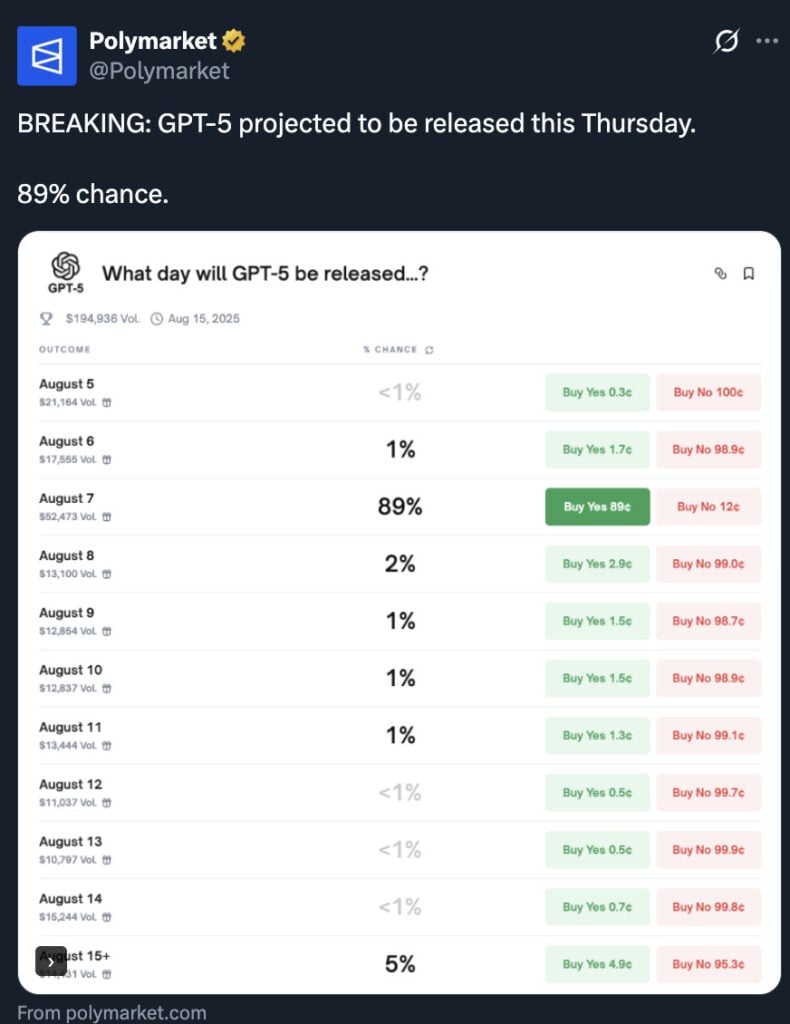

Polymarket suggests an 89% chance that GPT-5 will be released on Thursday. Source: X

2. What Insiders Say About Technical Gains

Reporting from industry sources indicates that OpenAI’s internal code-named build, “Orion,” was deemed insufficient for the GPT-5 label and ultimately shipped as GPT-4.5. According to engineers familiar with the training runs, the new model improves most noticeably in mathematical reasoning and software-code generation, while showing only moderate gains in open-ended conversation and general knowledge retrieval.

Two structural challenges appear to constrain further leaps:

These factors do not imply stagnation; rather, they signal that architectural innovation (mixture-of-experts routing, modular training, or entirely new model classes) will likely be required to regain earlier momentum.

3. Durability and Model Drift

Multiple benchmark studies now show that large language models can degrade over time when asked to perform repetitive, long-horizon tasks. A recent evaluation of accounting workflows found error rates creeping into double digits within a year of deployment, with some models entering repetitive loops that prevented task completion. If GPT-5 exhibits similar drift, mission-critical domains such as finance, compliance, and safety engineering will still require careful human oversight.
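One way to picture the oversight this implies is a rolling error-rate check that escalates to a human reviewer once failures reach a set level. The sketch below is illustrative only: the `DriftMonitor` class, its window size, and the 10% threshold (chosen to mirror the "double digits" figure above) are assumptions, not part of any cited study or OpenAI tooling.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical helper: tracks a rolling error rate over recent task outcomes."""

    def __init__(self, window: int = 100, threshold: float = 0.10) -> None:
        # Keep only the most recent `window` outcomes; True means the task failed.
        self.outcomes = deque(maxlen=window)
        # Assumed escalation level (10%), not a value taken from any benchmark.
        self.threshold = threshold

    def record(self, failed: bool) -> None:
        """Store the outcome of one completed task."""
        self.outcomes.append(failed)

    def error_rate(self) -> float:
        """Fraction of failed tasks in the current window (0.0 if empty)."""
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True once the rolling error rate reaches the threshold."""
        return self.error_rate() >= self.threshold
```

In use, a deployment would call `record()` after each checked task and route work to a human whenever `needs_review()` returns true. A windowed rate is used rather than a lifetime average so that a recent decline is not masked by a long history of earlier successes.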

4. Commercial Context and Capital Expenditure

OpenAI’s financial profile illustrates the scale of investment behind these models. Annualised revenue has surpassed twelve billion dollars, but projected cash burn for 2025 remains close to eight billion, driven largely by purchases of high-end compute clusters and accompanying energy costs. Market enthusiasm continues unabated: a fundraising round of up to forty billion dollars is reportedly in motion, and speculation about a 2026 public listing persists.

For investors, the calculus is straightforward: each successive model that broadens the paying customer base and deepens engagement lengthens the company’s runway, offsetting the heavy capital intensity of cutting-edge research. For OpenAI, the implicit mandate is equally clear: translate research achievements into robust, revenue-generating products rapidly enough to validate those expenditures.

5. Competitive Landscape

External pressure is mounting. Anthropic’s Claude 4, Google’s Gemini Ultra, and xAI’s Grok family each challenge GPT-4 in at least one performance dimension. Meanwhile, open-source models now exceed two hundred billion parameters and offer researchers freedom to inspect and modify weights. Any advantage GPT-5 introduces may narrow more quickly than in previous cycles unless it delivers a distinctly different capability profile.

6. Practical Expectations for GPT-5

A disciplined forecast of the initial production release would include:

| Capability Area | Plausible Outcome in GPT-5 v1.0 |
| --- | --- |
| Reasoning depth | Noticeable but moderate improvement; fewer dead-ends in chain-of-thought tasks |
| Code generation | Higher benchmark pass rates; real-world bug density reduced but not eliminated |
| Knowledge freshness | Continued reliance on retrieval-augmented pipelines rather than native, live data |
| Long-term consistency | Gradual performance decay likely without active reinforcement or fine-tuning |

In other words, GPT-5 should be viewed as a significant refinement rather than a transformational leap similar to the jump from GPT-3 to GPT-4.

7. Looking Beyond This Release

Sam Altman has suggested that the current transformer-based paradigm can deliver “three or four” additional generations of meaningful improvement. If GPT-5 is counted among them, the horizon for scale-driven progress may extend only to GPT-8. Whether subsequent breakthroughs will be secured through novel architectures, enhanced data-engineering pipelines, or entirely new forms of neuro-symbolic computation remains an open question.

9. Conclusion

GPT-5 is poised to advance the state of large language models in meaningful, but measured, ways. The model’s release will almost certainly deliver sharper mathematical reasoning, cleaner code generation, and a smoother conversational experience. Yet expectations of a categorical leap toward artificial general intelligence are premature. For the foreseeable future, progress will remain incremental, and the most durable differentiators may be organisational (how effectively companies deploy, fine-tune, and govern these systems) rather than purely model-centric.

A prudent stance, therefore, is cautiously optimistic: ready to exploit genuine improvements, mindful of persistent limitations, and alert to the possibility that the next true inflection point may arise from an altogether different approach.