OpenAI Just Dropped Two Open-Source AI Models That Run Locally—And Rival Paid Alternatives

OpenAI shakes up the AI landscape with not one, but two open-source models that deliver premium performance—without the premium price tag.

Local power, unleashed. These models ditch the cloud, running directly on your hardware while matching the capabilities of their paid counterparts. No subscriptions, no gatekeeping—just raw AI potential in your hands.

Wall Street’s probably sweating. Another ‘disruptive’ tech that won’t fit neatly into their quarterly growth projections.

The catch? Zero hand-holding. OpenAI’s throwing these over the fence—it’s up to developers to make them sing. Early benchmarks show near-parity with closed models, but the real test begins now.

One thing’s clear: The AI arms race just went open season. And the incumbents? They’re officially on notice.

Image: OpenAI

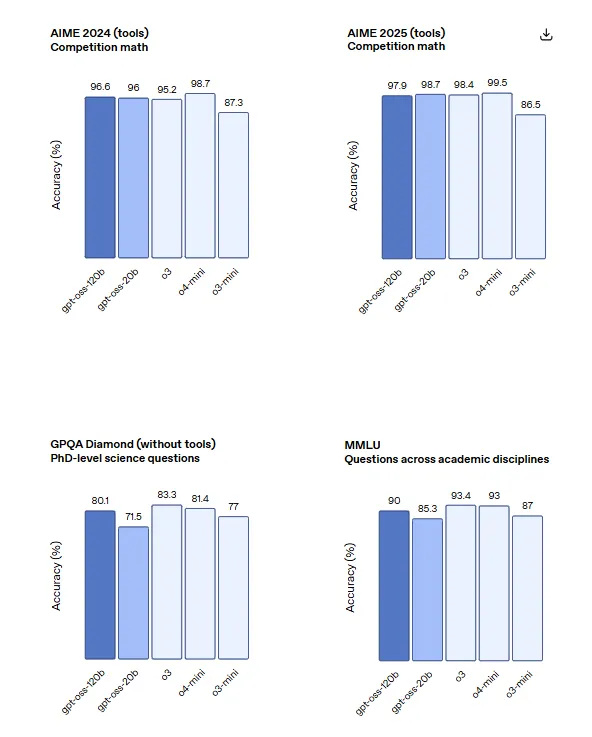

The smaller gpt-oss-20b matched or exceeded o3-mini across these benchmarks despite its size. It scored 2516 Elo on Codeforces with tools, reached 95.2% on AIME 2024, and hit 42.5% on HealthBench, all while fitting within memory constraints that make it viable for edge deployment.

Both models support three reasoning effort levels—low, medium, and high—that trade latency for performance. Developers can adjust these settings with a single sentence in the system message. The models were post-trained using processes similar to o4-mini, including supervised fine-tuning and what OpenAI described as a "high-compute RL stage."
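For developers wondering what "a single sentence in the system message" looks like in practice, here is a minimal sketch. It assumes the common chat format of role/content dictionaries and assumes the model reads a line of the form `Reasoning: <level>` in the system message, as in OpenAI's published gpt-oss examples. Check the model card for the exact wording. The helper name `build_messages` is hypothetical.

```python
from typing import Dict, List

# The three effort levels OpenAI describes for gpt-oss.
EFFORT_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> List[Dict[str, str]]:
    """Build a chat transcript whose system message sets the reasoning effort.

    Hypothetical helper. Assumption: the model honors a system line of the
    form "Reasoning: <level>"; verify the exact phrasing against the model card.
    """
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Higher effort trades latency for (usually) better answers.
    for level in EFFORT_LEVELS:
        print(build_messages("How many primes are below 100?", level)[0])
```

The resulting list can be handed to whichever local runtime serves the model. Only the system line changes between effort levels; the user prompt stays the same.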

But don’t assume that because anyone can modify these models, you’ll have an easy time turning them to harmful ends. OpenAI filtered out certain harmful data related to chemical, biological, radiological, and nuclear threats during pre-training. The post-training phase used deliberative alignment and instruction hierarchy to teach the models to refuse unsafe prompts and defend against prompt injections.

In other words, OpenAI claims to have designed the models to be safe enough that even modified versions shouldn’t reach dangerous levels of capability.

Eric Wallace, an OpenAI alignment expert, revealed the company conducted unprecedented safety testing before release. "We fine-tuned the models to intentionally maximize their bio and cyber capabilities," Wallace posted on X. The team curated domain-specific data for biology and trained the models in coding environments to solve capture-the-flag challenges.

Today we release gpt-oss-120b and gpt-oss-20b—two open-weight LLMs that deliver strong performance and agentic tool use.

Before release, we ran a first of its kind safety analysis where we fine-tuned the models to intentionally maximize their bio and cyber capabilities 🧵 pic.twitter.com/err2mBcggx

— Eric Wallace (@Eric_Wallace_) August 5, 2025

The adversarially fine-tuned versions underwent evaluation by three independent expert groups. "On our frontier risk evaluations, our malicious-finetuned gpt-oss underperforms OpenAI o3, a model below Preparedness High capability," Wallace stated. The testing indicated that even with robust fine-tuning using OpenAI's training stack, the models couldn't reach dangerous capability levels according to the company's Preparedness Framework.

That said, the models keep their chain-of-thought reasoning unsupervised, which OpenAI says is of paramount importance for keeping a wary eye on the AI. "We did not put any direct supervision on the CoT for either gpt-oss model," the company stated. "We believe this is critical to monitor model misbehavior, deception and misuse."

OpenAI hides the full chain of thought on its best proprietary models to keep competitors from replicating their results and to avoid another DeepSeek event. With open weights and a visible chain of thought, that kind of event could now happen even more easily.

The models are available on Hugging Face. But while they run locally, you’ll need a behemoth of a GPU with at least 80GB of VRAM (like the $17K Nvidia A100) to run the version with 120 billion parameters.

The smaller version with 20 billion parameters requires at least 16GB of VRAM (like the $3K Nvidia RTX 4090), which is a lot, but not that crazy for consumer-grade hardware.
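Why those VRAM figures? A back-of-the-envelope estimate multiplies the parameter count by the bits stored per parameter. The sketch below is illustrative only. It uses the article's rounded counts (120 billion and 20 billion). It also assumes every weight is stored at about 4.25 bits, the cost of a 4-bit format with one 8-bit scale shared by each block of 32 values, such as MXFP4. Real checkpoints mix formats, and the KV cache and activations need memory on top of the weights.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory to hold the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: 4-bit values plus an 8-bit scale shared by each 32-value block.
BITS_4BIT_BLOCK = 4 + 8 / 32  # = 4.25 bits per weight
BITS_FP16 = 16                # unquantized half precision, for comparison

if __name__ == "__main__":
    # (model, nominal parameter count from the article, VRAM floor from the article)
    for name, params, vram_gb in [("gpt-oss-120b", 120e9, 80), ("gpt-oss-20b", 20e9, 16)]:
        quantized = weight_memory_gb(params, BITS_4BIT_BLOCK)
        half = weight_memory_gb(params, BITS_FP16)
        print(f"{name}: ~{quantized:.1f} GB at 4.25 bits vs ~{half:.0f} GB at 16 bits "
              f"(GPU floor: {vram_gb} GB)")
```

Under these assumptions the 120-billion-parameter weights come to roughly 64GB and the 20-billion-parameter weights to roughly 11GB. Both leave headroom under the 80GB and 16GB floors. At 16 bits per weight, neither model would fit.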