Microsoft’s AI Now Diagnoses Like Dr. House—And Bills Like a Warehouse Club

Healthcare just got a Silicon Valley makeover—and your wallet might not survive the encounter.

Microsoft's new diagnostic AI cracks medical mysteries with Sherlock-level deduction... then slaps you with a receipt straight out of a bulk grocery chain. The algorithm spots rare conditions faster than any human physician—provided you've got the subscription plan to unlock its full potential.

Early adopters report 92% diagnostic accuracy (with 100% more surprise billing). The system cross-references symptoms against 14 million case studies in milliseconds—then helpfully suggests financing options when your insurance balks.

One ICU director raves: 'It caught a zebra diagnosis three residents missed.' The CFO added: 'We're finally monetizing those pesky HIPAA-compliant data lakes.'

As hospitals race to implement the tech, critics warn the real pathology might be in the pricing model—where 'AI-assisted care' somehow costs more than the human alternative. But hey, at least your prior authorization gets denied at machine-learning speeds now.

How Microsoft’s Medical Council Works

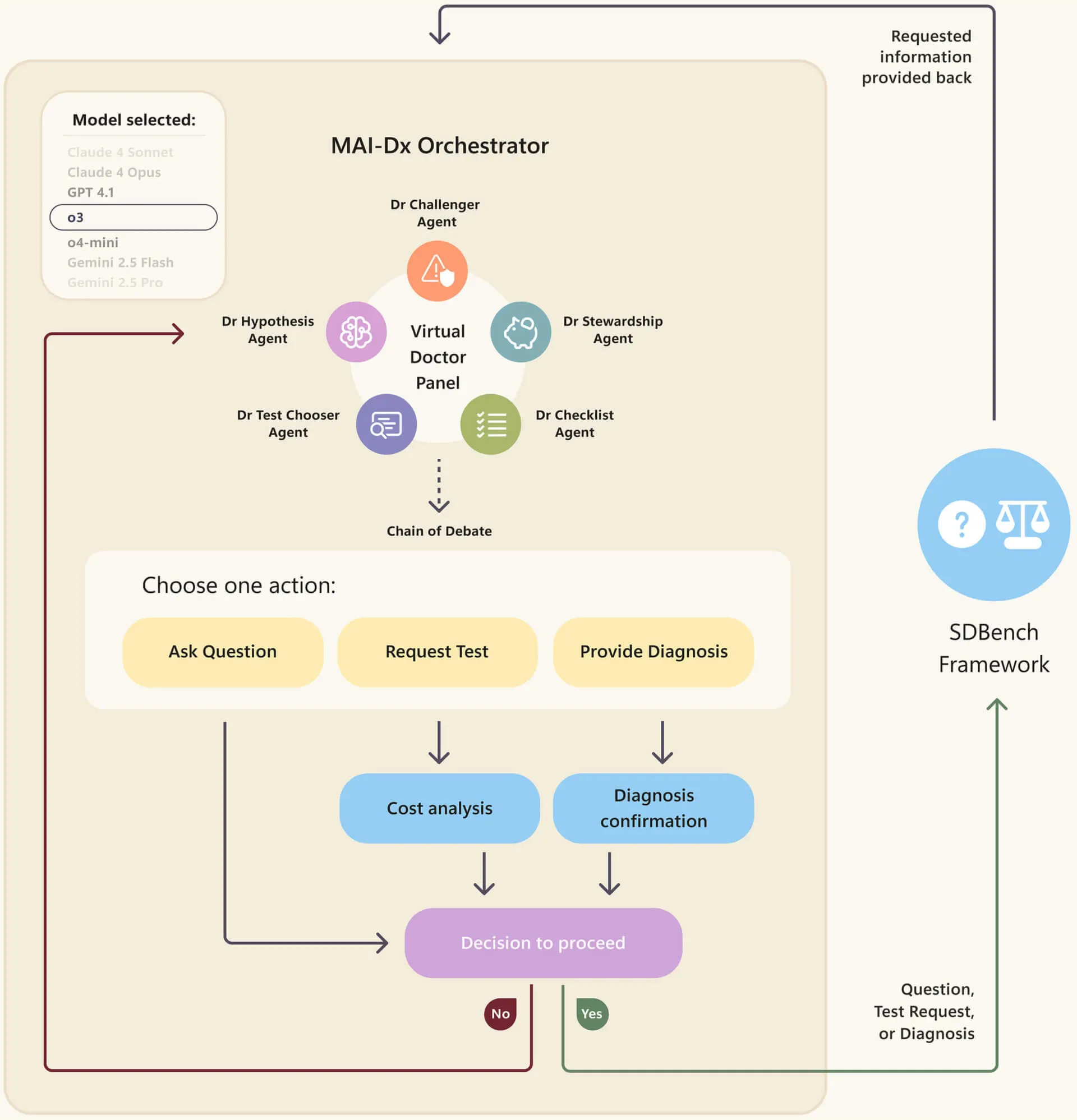

MAI-DxO works like a medical dream team trapped in a computer. Microsoft evaluates the system on what it calls the Sequential Diagnosis Benchmark, or SDBench.

Instead of posing multiple-choice questions, as traditional medical AI tests do, the benchmark mirrors how doctors actually work: starting with limited information about a patient, asking follow-up questions, ordering tests, and adjusting theories as new data arrives.

Each test incurs a cost in virtual money, forcing the AI to balance thoroughness against healthcare spending.
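To make that loop concrete, here is a minimal Python sketch of a sequential encounter with a running bill. It is an illustration only, not Microsoft's code: the `run_case` function, the `Encounter` record, the test prices, and the rule that questions are free are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical price list in virtual dollars (illustrative, not Microsoft's).
TEST_COSTS = {"cbc": 50, "chest_xray": 200, "ct_scan": 1200}


@dataclass
class Encounter:
    """What has been revealed so far and how much virtual money was spent."""
    findings: list[str] = field(default_factory=list)
    spent: int = 0


def run_case(case: dict[str, str], actions: list[tuple[str, str]], budget: int) -> Encounter:
    """Reveal information step by step, stopping before the budget is exceeded."""
    enc = Encounter(findings=[case["initial"]])
    for kind, name in actions:
        # Assumption: follow-up questions cost nothing, tests cost their list price.
        cost = TEST_COSTS[name] if kind == "test" else 0
        if enc.spent + cost > budget:
            break  # the next step would overspend, so stop here
        enc.spent += cost
        enc.findings.append(case.get(name, "no information available"))
    return enc


case = {
    "initial": "fever and cough",
    "travel": "returned from abroad last week",
    "cbc": "elevated white cell count",
    "ct_scan": "small lung nodule",
}
actions = [("ask", "travel"), ("test", "cbc"), ("test", "ct_scan")]
result = run_case(case, actions, budget=500)
print(result.spent, result.findings)
```

With a 500-dollar cap, the sketch asks the question, orders the cheap blood test, and stops before the CT scan, which is the kind of thoroughness-versus-spending trade-off the benchmark is meant to score.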

The system essentially simulates a medical council debating a case, with different models playing different roles. The models debate, disagree, and eventually reach a consensus, just as your physicians would if you were a challenging case to study.

In one configuration, MAI-DxO achieved 80% accuracy while spending $2,397 per case, approximately 20% less than the $2,963 that physicians typically spend.

At peak performance, it achieved 85.5% accuracy at a cost of $7,184 per case. By comparison, OpenAI's standalone o3 model achieved 78.6% accuracy but cost $7,850.
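The savings are easy to check. The short Python calculation below uses only the dollar amounts quoted above; the helper name `percent_saved` is ours, introduced for the example.

```python
def percent_saved(baseline: float, cost: float) -> float:
    """Percentage by which `cost` undercuts `baseline`."""
    return 100 * (baseline - cost) / baseline


# Figures quoted in the article, in dollars per case.
physician_cost = 2963
mai_dxo_efficient_cost = 2397
mai_dxo_peak_cost = 7184
o3_cost = 7850

vs_physicians = round(percent_saved(physician_cost, mai_dxo_efficient_cost), 1)
vs_o3 = round(percent_saved(o3_cost, mai_dxo_peak_cost), 1)
print(vs_physicians, vs_o3)  # 19.1 8.5
```

So the 80%-accuracy configuration comes in about 19% under the physicians' spending, and the peak configuration costs roughly 8.5% less than standalone o3 while scoring higher.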

The virtual physician panel includes Dr. Hypothesis, who maintains a running list of the three most likely diagnoses using Bayesian probability methods.

Dr. Test-Chooser selects up to three diagnostic tests per round, aiming for maximum information gain.

Dr. Challenger acts as the contrarian, seeking evidence that contradicts the prevailing theory. Dr. Stewardship vetoes expensive tests with low diagnostic value.

Meanwhile, Dr. Checklist ensures all test names are valid and the team's reasoning stays consistent.
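As a rough illustration of what three of those roles do, the Python sketch below updates a list of candidate diagnoses with Bayes' rule, keeps the top three, and picks up to three tests while vetoing poor value. It is a toy under stated assumptions, not Microsoft's implementation: the function names, the probabilities, the information-gain scores, and the cost-per-bit veto threshold are all made up for the example.

```python
def bayes_update(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Posterior is proportional to prior times P(finding | diagnosis)."""
    unnormalized = {dx: p * likelihood.get(dx, 0.0) for dx, p in prior.items()}
    total = sum(unnormalized.values())
    if total == 0:
        return dict(prior)  # finding rules out everything in this toy model; keep the prior
    return {dx: value / total for dx, value in unnormalized.items()}


def top_three(posterior: dict[str, float]) -> list[str]:
    """Dr. Hypothesis: the three most probable diagnoses, best first."""
    return sorted(posterior, key=posterior.get, reverse=True)[:3]


def choose_tests(candidates: dict[str, tuple[float, int]], max_cost_per_bit: float) -> list[str]:
    """Dr. Test-Chooser plus Dr. Stewardship.

    `candidates` maps a test name to (expected information gain in bits, cost).
    Tests costing more than `max_cost_per_bit` per bit are vetoed (an assumed
    rule), then up to three survivors are returned, most informative first.
    """
    allowed = {
        name: gain
        for name, (gain, cost) in candidates.items()
        if gain > 0 and cost / gain <= max_cost_per_bit
    }
    return sorted(allowed, key=allowed.get, reverse=True)[:3]


# Made-up numbers for a patient with a chronic cough.
prior = {"pneumonia": 0.4, "tuberculosis": 0.2, "lung_cancer": 0.1, "sarcoidosis": 0.3}
night_sweats = {"pneumonia": 0.1, "tuberculosis": 0.6, "lung_cancer": 0.3, "sarcoidosis": 0.2}
posterior = bayes_update(prior, night_sweats)
shortlist = top_three(posterior)

candidates = {
    "cbc": (0.2, 50),
    "sputum_culture": (0.9, 150),
    "chest_xray": (0.4, 200),
    "ct_scan": (0.5, 1200),
    "pet_scan": (0.6, 5000),
}
ordered = choose_tests(candidates, max_cost_per_bit=1000)
print(shortlist, ordered)
```

In this toy run the new finding pushes tuberculosis to the top of the list, and the two scans are vetoed as too expensive for what they would reveal. A contrarian like Dr. Challenger could be modeled by re-running the update with findings chosen to favor the lower-ranked diagnoses.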

Microsoft tested the system on cases published in the New England Journal of Medicine between 2024 and 2025, after the AI's training cutoff date, so the model could not simply have memorized the answers.

These were difficult cases that required thorough workups to diagnose properly.

The 21 physicians Microsoft recruited for comparison had between 5 and 20 years of experience, with a median of 12 years.

They worked without access to colleagues, textbooks, or AI assistance to ensure a fair comparison of raw diagnostic ability. They achieved a 20% success rate on these admittedly difficult cases.

The system operates in several modes. "Instant Answer" provides a diagnosis based solely on initial information for $300—the cost of one physician visit.

"Question Only" allows follow-up questions without ordering tests. "Budgeted" tracks costs with a maximum spending limit. "No Budget" gives the panel free rein, while "Ensemble" runs multiple panels and aggregates their conclusions for maximum accuracy.
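One way to picture the five modes is as a small table of permissions. The Python sketch below is a guess at how such a configuration might be laid out, not Microsoft's actual settings: the `Policy` fields, the choice of three panels for the ensemble, whether the ensemble is budget-capped, and the majority-vote aggregation are all assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    INSTANT_ANSWER = "Instant Answer"
    QUESTION_ONLY = "Question Only"
    BUDGETED = "Budgeted"
    NO_BUDGET = "No Budget"
    ENSEMBLE = "Ensemble"


@dataclass(frozen=True)
class Policy:
    may_ask: bool          # can the panel ask follow-up questions?
    may_order_tests: bool  # can it order diagnostic tests?
    budget_capped: bool    # is there a maximum spending limit?
    panels: int            # how many independent panels run


# Assumed mapping from each mode to what it permits.
POLICIES = {
    Mode.INSTANT_ANSWER: Policy(False, False, False, 1),
    Mode.QUESTION_ONLY: Policy(True, False, False, 1),
    Mode.BUDGETED: Policy(True, True, True, 1),
    Mode.NO_BUDGET: Policy(True, True, False, 1),
    Mode.ENSEMBLE: Policy(True, True, False, 3),  # panel count and cap are guesses
}


def aggregate(diagnoses: list[str]) -> str:
    """Combine panel conclusions by majority vote (an assumed rule)."""
    return Counter(diagnoses).most_common(1)[0][0]


final = aggregate(["tuberculosis", "sarcoidosis", "tuberculosis"])
print(POLICIES[Mode.BUDGETED], final)
```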

The Future of Medicine?

MAI-DxO represents Microsoft's broader push into consumer health AI.

The company reports over 50 million health-related sessions daily across its Bing and Copilot products. From knee pain searches to urgent care lookups, Microsoft sees search engines and AI assistants becoming the new front door for healthcare.

Of course, this is just one more step in a very long timeline of medical tech.

For context, Stanford's MYCIN system diagnosed bacterial infections in the 1970s, and Google's AMIE simulated doctor-patient conversations just last year.

Microsoft developed MAI-DxO as a model-agnostic system, meaning it can work with AI models from different companies.

In testing, it boosted performance across models from OpenAI, Google, Anthropic, Meta, and others by an average of 11%. The improvement was statistically significant across all tested models.
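In software terms, "model-agnostic" usually means the orchestration layer talks to a narrow interface rather than to one vendor's API. The Python sketch below shows the general idea with stand-in models. The `ChatModel` protocol, the `complete` method, and the `run_role` helper are hypothetical names for illustration and do not come from Microsoft or any vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Anything that can turn a prompt into text can sit behind the panel."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a real model; it just labels and returns the prompt."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def run_role(model: ChatModel, role: str, case_summary: str) -> str:
    """Ask whichever model is plugged in to speak as one panel member."""
    return model.complete(f"You are {role}. Case: {case_summary}")


# The same orchestration code runs unchanged against two different backends.
for backend in (EchoModel("stub-a"), EchoModel("stub-b")):
    print(run_role(backend, "Dr. Challenger", "fever and cough"))
```

Swapping the backend changes nothing in `run_role`, which is the property that lets one orchestrator be compared across models from several companies.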

Dr. Dominic King and Harsha Nori, who led the research at Microsoft AI, emphasized in a blog post that the technology remains a research demonstration.

"Important challenges remain before generative AI can be safely and responsibly deployed across healthcare," they wrote. The system excels at complex diagnostic challenges but needs testing on routine cases.

Microsoft plans to submit the research for peer review and is working with healthcare organizations to validate the approach in clinical settings.

The company has made clear that any deployment would require "rigorous safety testing, clinical validation, and regulatory reviews."

For now, MAI-DxO remains confined to research labs. But with diagnostic errors contributing to nearly 10% of patient deaths and affecting millions annually, Microsoft's virtual physician panel represents another step toward AI-assisted healthcare.

The five-doctor AI team might diagnose better than 21 human physicians combined, but it is still too early to see a mainstream implementation.

Microsoft says AI won't replace doctors; it will augment them. The 21 physicians who scored 20% on those brutal NEJM cases are probably hoping that's true.

Edited by Sebastian Sinclair and Josh Quittner