Spain’s Multiverse Computing Secures $215M to Miniaturize AI for Smartphones—Big Brains, Tiny Models

Forget cloud-dependent giants—Spain’s Multiverse Computing just pocketed $215M to cram AI into your pocket. The goal? Shrinking models small enough for smartphones without the performance hit.

Why it matters: The race to decentralize AI just got hotter. While Big Tech hoards GPUs, startups like Multiverse are betting on efficiency: cutting costs, bypassing data centers, and (theoretically) dodging the next "AI winter" hype cycle.

The cynical take: Another nine-figure round for "AI democratization," because nothing says "for the people" like VCs banking on enterprise licensing deals. But if they deliver, your phone might finally stop autocorrecting "crypto" to "crisis."

The Physics Behind the Compression

Applying quantum-inspired concepts to tackle one of AI’s most pressing issues sounds improbable—but if the research holds up, it’s real.

Unlike traditional compression that simply cuts neurons or reduces numerical precision, CompactifAI uses tensor networks—mathematical structures that physicists developed to track particle interactions without drowning in data.

The process works like origami for AI models: weight matrices get folded into smaller, interconnected structures called Matrix Product Operators (MPOs).

Instead of storing every connection between neurons, the system preserves only the meaningful correlations and discards redundant patterns, such as information or relationships that repeat over and over.
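To make the origami metaphor concrete, here is a minimal Python sketch, assuming NumPy, of the textbook way to fold a weight matrix into a Matrix Product Operator: split the row and column indices into several "sites," then peel off one core at a time with truncated SVDs, keeping at most `max_bond` singular values at each cut. This is the generic tensor-train/MPO decomposition, not Multiverse's CompactifAI code; the names `matrix_to_mpo`, `mpo_to_matrix`, and `max_bond` are hypothetical.

```python
import numpy as np


def matrix_to_mpo(weight, row_dims, col_dims, max_bond):
    """Fold a (prod(row_dims) x prod(col_dims)) matrix into MPO cores.

    Standard tensor-train/MPO decomposition via sequential truncated SVDs.
    Core i has shape (r_left, row_dims[i], col_dims[i], r_right), and no
    bond dimension exceeds max_bond.
    """
    k = len(row_dims)
    # Split rows and columns into k "sites", then interleave them so each
    # site carries one row index and one column index: (m1, n1, m2, n2, ...).
    tensor = weight.reshape(*row_dims, *col_dims)
    tensor = tensor.transpose([ax for i in range(k) for ax in (i, k + i)])

    cores, rank, rest = [], 1, tensor
    for i in range(k - 1):
        rest = rest.reshape(rank * row_dims[i] * col_dims[i], -1)
        u, s, vt = np.linalg.svd(rest, full_matrices=False)
        keep = min(max_bond, s.size)  # drop the weakest correlations
        cores.append(u[:, :keep].reshape(rank, row_dims[i], col_dims[i], keep))
        rest = s[:keep, None] * vt[:keep]  # carry singular values into the remainder
        rank = keep
    cores.append(rest.reshape(rank, row_dims[-1], col_dims[-1], 1))
    return cores


def mpo_to_matrix(cores, row_dims, col_dims):
    """Contract MPO cores back into a dense matrix (e.g. to measure error)."""
    result = cores[0]
    for core in cores[1:]:
        # Sum over the bond index shared by neighbouring cores.
        result = np.tensordot(result, core, axes=([-1], [0]))
    k = len(cores)
    # Drop the dummy outer bonds, then un-interleave (m1, n1, ...) back
    # into (m1, ..., mk, n1, ..., nk).
    result = result.reshape([d for i in range(k) for d in (row_dims[i], col_dims[i])])
    result = result.transpose([2 * i for i in range(k)] + [2 * i + 1 for i in range(k)])
    return result.reshape(int(np.prod(row_dims)), int(np.prod(col_dims)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # A 64 x 64 matrix with hidden structure: a sum of two Kronecker products.
    a1, b1, a2, b2 = (rng.standard_normal((8, 8)) for _ in range(4))
    w = np.kron(a1, b1) + np.kron(a2, b2)
    cores = matrix_to_mpo(w, (8, 8), (8, 8), max_bond=2)
    error = np.linalg.norm(mpo_to_matrix(cores, (8, 8), (8, 8)) - w) / np.linalg.norm(w)
    print(f"dense params: {w.size}, MPO params: {sum(c.size for c in cores)}")
    print(f"relative reconstruction error: {error:.2e}")
```

The demo matrix has only two independent correlations across the cut, so a bond dimension of 2 reproduces it essentially exactly with 256 numbers instead of 4,096. Real weight matrices are rarely this tidy, so truncating them costs some accuracy.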

Multiverse discovered that AI models aren't uniformly compressible. Early layers prove fragile, while deeper layers, which recent research has shown to be less critical for performance, can withstand aggressive compression.

This selective approach, the company says, is what lets it achieve dramatic size reductions where other methods fail.
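A layer-aware policy like the one described above could be expressed as a per-layer compression schedule. The sketch below is purely illustrative: the 25% protected fraction, the linear ramp, the bond-dimension bounds, the 4096 x 4096 layer size, and the helper names `bond_schedule` and `two_site_mpo_params` are all assumptions, not Multiverse's published settings.

```python
def bond_schedule(num_layers, protect_fraction=0.25, max_bond=64, min_bond=8):
    """Hypothetical per-layer MPO bond dimensions, indexed by depth.

    None means "leave this layer dense". Early (fragile) layers are
    protected; deeper layers get progressively smaller bond dimensions,
    i.e. more aggressive compression.
    """
    protected = int(num_layers * protect_fraction)
    span = max(1, num_layers - protected - 1)
    schedule = []
    for layer in range(num_layers):
        if layer < protected:
            schedule.append(None)
        else:
            # Linear ramp from max_bond (shallowest compressed layer)
            # down to min_bond (deepest layer).
            t = (layer - protected) / span
            schedule.append(round(max_bond - t * (max_bond - min_bond)))
    return schedule


def two_site_mpo_params(row_dims, col_dims, bond):
    """Parameter count of a two-core MPO for a (m1*m2) x (n1*n2) matrix."""
    (m1, m2), (n1, n2) = row_dims, col_dims
    return m1 * n1 * bond + bond * m2 * n2


if __name__ == "__main__":
    dense = 4096 * 4096  # hypothetical 4096 x 4096 projection in every layer
    for layer, bond in enumerate(bond_schedule(8)):
        params = dense if bond is None else two_site_mpo_params((64, 64), (64, 64), bond)
        print(f"layer {layer}: bond={bond}, params={params:,} ({params / dense:.1%} of dense)")
```

The ramp is the simplest way to encode "deeper means more compressible"; a real system would more likely pick each layer's bond dimension from a measured accuracy budget.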

After compression, models undergo brief "healing"—retraining that takes less than one epoch thanks to the reduced parameter count. The company claims this restoration process runs 50% faster than training original models due to decreased GPU-CPU transfer loads.

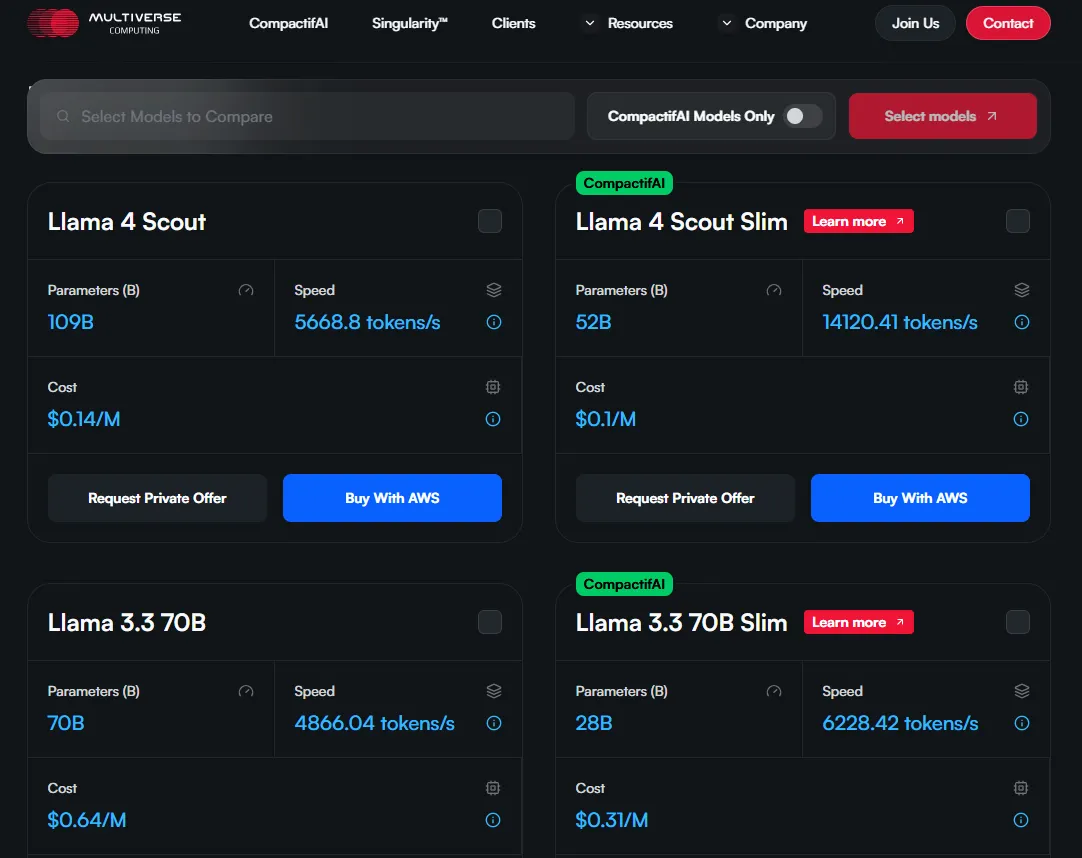

Long story short, per the company's own claims: you start with a model, run it through CompactifAI, and end up with a compressed version that has less than half of the original's parameters, runs inference twice as fast, costs far less to operate, and is just as capable as the original.

In its research, the team shows you can reduce the Llama-2 7B model’s memory needs by 93%, cut the number of parameters by 70%, speed up training by 50%, and speed up answering (inference) by 25%—while only losing 2–3% accuracy.
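The headline percentages are easier to picture as absolute numbers. The back-of-the-envelope Python below assumes a 16-bit baseline and takes "7B" at face value (Llama-2 7B actually has roughly 6.7 billion parameters); both are assumptions, not figures from the research.

```python
# Assumptions (not from the article): nominal 7B parameters, fp16 baseline.
NOMINAL_PARAMS = 7e9
BASELINE_BYTES_PER_PARAM = 2

# Reductions reported for Llama-2 7B.
MEMORY_REDUCTION = 0.93
PARAM_REDUCTION = 0.70

baseline_gb = NOMINAL_PARAMS * BASELINE_BYTES_PER_PARAM / 1e9
compressed_gb = baseline_gb * (1 - MEMORY_REDUCTION)
remaining_params = NOMINAL_PARAMS * (1 - PARAM_REDUCTION)
# Average storage per surviving parameter implied by the two figures together.
bits_per_param = compressed_gb * 1e9 * 8 / remaining_params

print(f"baseline:   {baseline_gb:.2f} GB")
print(f"compressed: {compressed_gb:.2f} GB, {remaining_params / 1e9:.1f}B parameters")
print(f"implied storage: {bits_per_param:.2f} bits per remaining parameter")
```

Under these assumptions, about 14 GB shrinks to under 1 GB, and the remaining ~2.1 billion parameters average under 4 bits each. A memory cut that outpaces the parameter cut suggests the reported figure also reflects lower numerical precision, not tensor networks alone; that is an inference from the arithmetic, not a statement from the company.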

Traditional shrinking methods such as quantization (reducing numerical precision, like using fewer decimal places), pruning (cutting out less important neurons entirely, like trimming dead branches from a tree), and distillation (training a smaller model to mimic a larger one's behavior) don't come close to these numbers, according to the team.
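For contrast, the first two baselines can be sketched in a few lines of NumPy. These are generic textbook versions (symmetric per-tensor int8 quantization and structured pruning of whole output neurons by L2 norm), not the specific baselines the team benchmarked against; the function names are hypothetical, and distillation is left out because it needs a full training loop.

```python
import numpy as np


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: 1 byte per weight."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # guard all-zero input
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


def prune_neurons(weights, keep_fraction):
    """Structured pruning: keep the output neurons (rows) with the largest
    L2 norms and drop the rest entirely. Returns the smaller matrix and
    the indices of the surviving rows."""
    norms = np.linalg.norm(weights, axis=1)
    n_keep = max(1, int(round(weights.shape[0] * keep_fraction)))
    keep = np.sort(np.argsort(norms)[-n_keep:])
    return weights[keep], keep


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((256, 128)).astype(np.float32)
    q, scale = quantize_int8(w)
    err = np.abs(dequantize_int8(q, scale) - w).max()
    print(f"int8: {w.nbytes} -> {q.nbytes} bytes, max abs error {err:.4f}")
    pruned, kept = prune_neurons(w, keep_fraction=0.5)
    print(f"pruning: {w.shape} -> {pruned.shape}")
```

Quantization keeps every weight but stores each one more coarsely; pruning keeps full precision but throws whole neurons away. Tensor-network compression instead rewrites the matrix itself in a factored form.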

Multiverse already serves over 100 clients including Bosch and Bank of Canada, applying their quantum-inspired algorithms beyond AI to energy optimization and financial modeling.

The Spanish government co-invested €67 million in March, pushing total funding above $250 million.

Currently offering compressed versions of open-source models like Llama and Mistral through AWS, the company plans to expand to DeepSeek R1 and other reasoning models.

Proprietary systems from OpenAI or Anthropic (maker of Claude) remain off-limits, since their weights aren't available for tinkering or study.

The technology's promise extends beyond cost savings. HP Tech Ventures' involvement signals interest in edge AI deployment: running sophisticated models locally rather than on cloud servers.

"Multiverse’s innovative approach has the potential to bring AI benefits of enhanced performance, personalization, privacy and cost efficiency to life for companies of any size," Tuan Tran, HP's President of Technology and Innovation, said.

So, if you find yourself running DeepSeek R1 on your smartphone someday, these dudes may be the ones to thank.

Edited by Josh Quittner and Sebastian Sinclair