AI Model Now Capable of Full-Blown Terror Screams—Because That’s What the Market Demanded

Breakthrough in artificial emotional response systems cuts straight to primal fear. No more polite error chimes—this neural net bypasses decorum with blood-curdling shrieks. Investors already salivating over horror-themed customer service applications. (Bonus jab: Probably gets a $10B valuation before proving it can stop screaming.)

The race to make emotional AI

AI platforms are increasingly focused on making their text-to-speech models show emotion, addressing a missing element in human-machine interaction. However, they are not perfect and most of the models—open or closed—tend to create an uncanny valley effect that diminishes user experience.

We have tried and compared a few different platforms that focus on this specific topic of emotional speech, and most of them are pretty good as long as users get into the right mindset and know their limitations. However, the technology is still far from convincing.

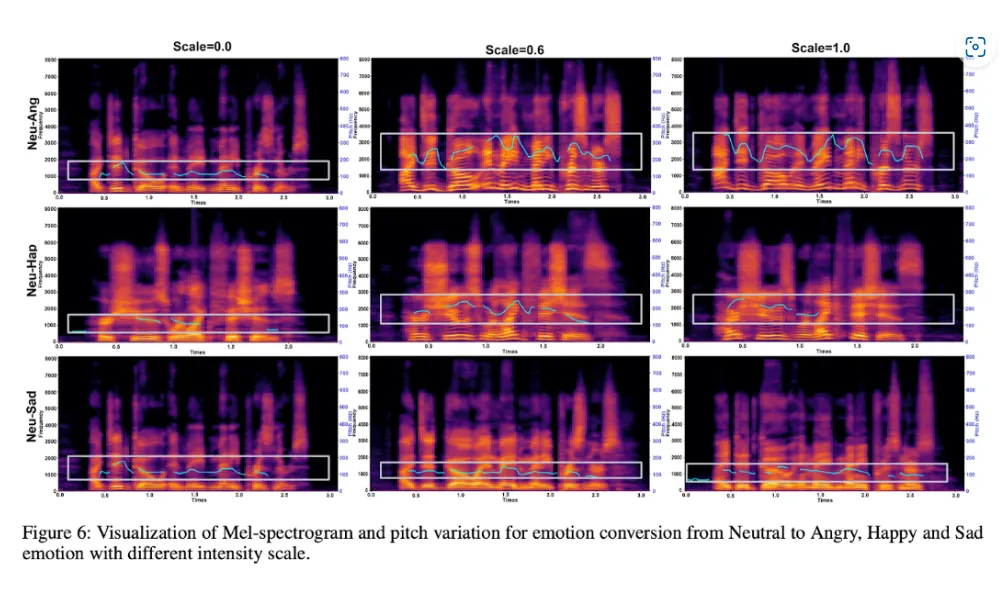

To tackle this problem, researchers are employing various techniques. Some train models on datasets with emotional labels, allowing AI to learn the acoustic patterns associated with different emotional states. Others use deep neural networks and large language models to analyze contextual cues for generating appropriate emotional tones.

ElevenLabs, one of the market leaders, tries to interpret emotional context directly from text input, looking at linguistic cues, sentence structure, and punctuation to infer the appropriate emotional tone. Its flagship model, Eleven Multilingual v2, is known for its rich emotional expression across 29 languages.

Meanwhile, OpenAI recently launched "gpt-4o-mini-tts" with customizable emotional expression. During demonstrations, the firm highlighted the ability to specify emotions like "apologetic" for customer support scenarios, pricing the service at 1.5 cents per minute to make it accessible for developers. Its state-of-the-art Advanced Voice mode is good at mimicking human emotion, but it is so exaggerated and enthusiastic that it could not compete in our tests against alternatives like Hume.
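To put the quoted price in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the 1.5 cents per minute figure applies uniformly to generated audio. The function name `estimate_tts_cost` and the example workload are hypothetical, not part of any OpenAI tooling.

```python
from decimal import Decimal

# Price quoted in the article: 1.5 cents per minute of generated audio.
PRICE_PER_MINUTE_USD = Decimal("0.015")


def estimate_tts_cost(audio_seconds) -> Decimal:
    """Estimate the cost in USD for a given duration of generated audio.

    Assumes flat per-minute pricing with no minimums or rounding per request.
    """
    seconds = Decimal(str(audio_seconds))
    cost = seconds * PRICE_PER_MINUTE_USD / Decimal(60)
    return cost.quantize(Decimal("0.0001"))


if __name__ == "__main__":
    # Hypothetical workload: 1,000 support replies of 20 seconds each.
    total_seconds = 1_000 * 20
    print(f"Estimated cost: ${estimate_tts_cost(total_seconds)}")
```

Under those assumptions, a thousand 20-second apologies would cost about five dollars, which helps explain why the pricing is pitched at developers.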

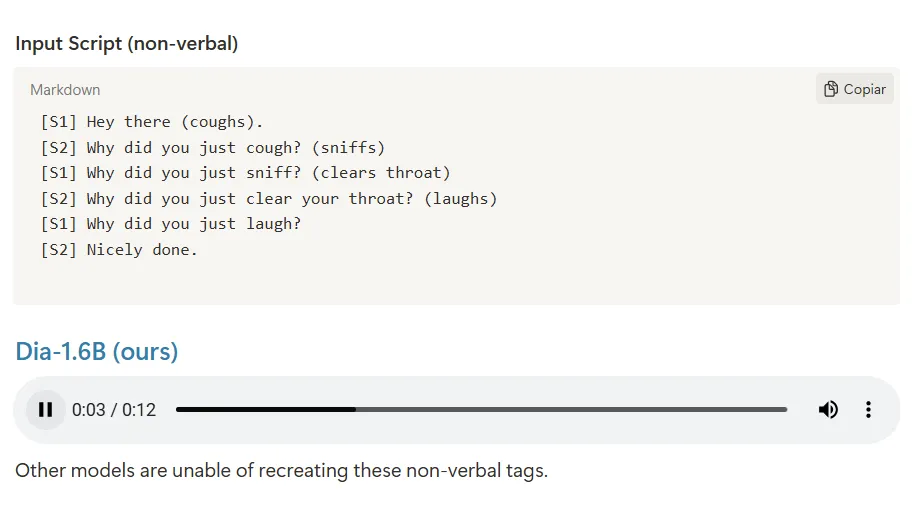

Where Dia-1.6B potentially breaks new ground is in how it handles nonverbal communication. The model can synthesize laughter, coughing, and throat clearing when triggered by specific text cues like "(laughs)" or "(coughs)", adding a layer of realism often missing in standard TTS outputs.
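To make the idea of text cues concrete, here is a minimal Python sketch of how a script could be split into spoken text and nonverbal cues before being handed to a speech model. It is not Dia's implementation. The function `split_script`, the cue set, and the exact spelling "(clears throat)" are assumptions for illustration.

```python
import re

# Cues based on the sounds named in the article. The exact tag spellings a
# given model accepts are an assumption here.
NONVERBAL_CUES = {"laughs", "coughs", "clears throat"}

# Matches a parenthetical with no nested parentheses, e.g. "(laughs)".
_CUE_PATTERN = re.compile(r"\(([^()]+)\)")


def split_script(text: str) -> list[tuple[str, str]]:
    """Split text into ordered ("speech", ...) and ("cue", ...) segments."""
    segments = []
    pos = 0
    for match in _CUE_PATTERN.finditer(text):
        cue = match.group(1).strip().lower()
        if cue not in NONVERBAL_CUES:
            continue  # Unknown parentheticals stay part of the spoken text.
        speech = text[pos:match.start()].strip()
        if speech:
            segments.append(("speech", speech))
        segments.append(("cue", cue))
        pos = match.end()
    tail = text[pos:].strip()
    if tail:
        segments.append(("speech", tail))
    return segments


if __name__ == "__main__":
    for kind, value in split_script("Well (laughs) that was unexpected. (coughs)"):
        print(kind, "->", value)
```

A model that handles such cues natively does this work internally; the sketch only shows why a plain "(laughs)" in the input can be treated as an instruction rather than as words to read aloud.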

Beyond Dia-1.6B, other notable open-source projects include EmotiVoice—a multi-voice TTS engine that supports emotion as a controllable style factor—and Orpheus, known for ultra-low latency and lifelike emotional expression.

It’s hard to be human

But why is emotional speech so hard? After all, AI models stopped sounding robotic a long time ago.

Well, it seems that naturalness and emotionality are two different beasts. A model can sound human and have a fluid, convincing tone, but completely fail at conveying emotion beyond simple narration.

“In my view, emotional speech synthesis is hard because the data it relies on lacks emotional granularity. Most training datasets capture speech that is clean and intelligible, but not deeply expressive,” Kaveh Vahdat, CEO of the AI video generation company RiseAngle, told Decrypt. “Emotion is not just tone or volume; it is context, pacing, tension, and hesitation. These features are often implicit, and rarely labeled in a way machines can learn from.”

“Even when emotion tags are used, they tend to flatten the complexity of real human affect into broad categories like ‘happy’ or ‘angry’, which is far from how emotion actually works in speech,” Vahdat argued.

We tried Dia, and it is actually good enough. It generated around one second of audio per second of inference, and it does convey tonal emotions, but they are so exaggerated that the result doesn’t feel natural. And this is the crux of the whole problem: models lack so much contextual awareness that it is hard to isolate a single emotion without additional cues and make it coherent enough for humans to actually believe it is part of a natural interaction.

The "uncanny valley" effect poses a particular challenge, as synthetic speech cannot compensate for a neutral robotic voice simply by adopting a more emotional tone.

And more technical hurdles abound. AI systems often perform poorly when tested on speakers not included in their training data, an issue that shows up as low classification accuracy in speaker-independent experiments. Real-time processing of emotional speech also requires substantial computational power, limiting deployment on consumer devices.

Data quality and bias also present significant obstacles. Training AI for emotional speech requires large, diverse datasets capturing emotions across demographics, languages, and contexts. Systems trained on specific groups may underperform with others—for instance, AI trained primarily on Caucasian speech patterns might struggle with other demographics.

Perhaps most fundamentally, some researchers argue that AI cannot truly mimic human emotion due to its lack of consciousness. While AI can simulate emotions based on patterns, it lacks the lived experience and empathy that humans bring to emotional interactions.

Guess being human is harder than it seems. Sorry, ChatGPT.