Nearly All Coders Now Use AI—But Nobody Trusts It, Google Study Reveals

The AI revolution hits programming—with a massive trust gap.

Google's latest research exposes the industry's dirty secret: developers deploy AI tools daily while maintaining deep skepticism about their reliability.

The Adoption Paradox

Nearly every coder now integrates AI assistance into their workflow. They're using these tools to automate repetitive tasks, generate code snippets, and debug faster than ever before.

Yet the same developers who rely on AI express significant doubts about output quality, security implications, and long-term maintainability of AI-generated code.

Trust Deficit Grows

Teams report double-checking every AI suggestion, treating outputs as rough drafts rather than finished products. The technology cuts development time but introduces new verification overhead.

Some organizations bypass AI for critical systems entirely—opting for traditional coding methods when building financial infrastructure or security-sensitive applications.

The verification process often takes longer than writing code from scratch, creating a productivity paradox that mirrors Wall Street's obsession with automation that requires more human oversight than manual work.

Image: Google

Despite the lack of trust, over 80% of surveyed developers reported that AI enhanced their work efficiency, while 59% noted improvements in code quality.

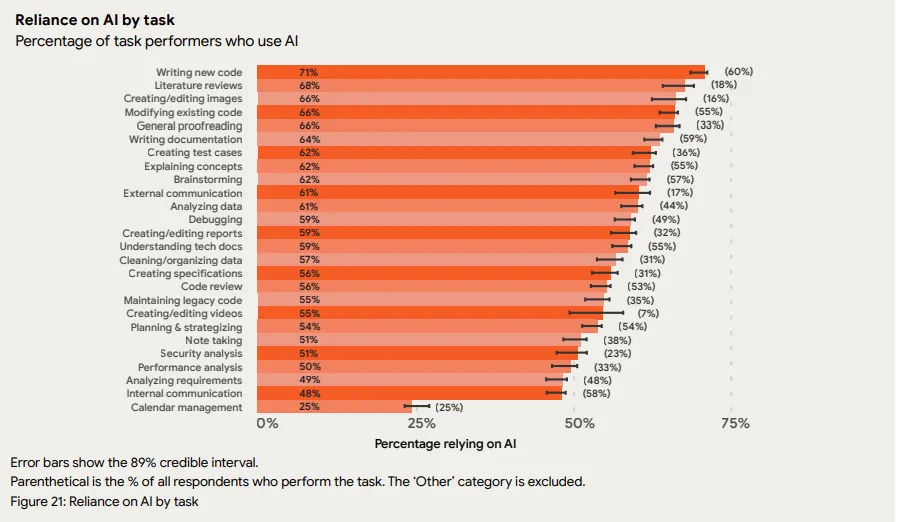

However, here's where things get peculiar: 65% of respondents described themselves as heavily reliant on these tools, despite not fully trusting them.

That 65% breaks down as 37% reporting "moderate" reliance, 20% saying "a lot," and 8% admitting to "a great deal" of dependence.

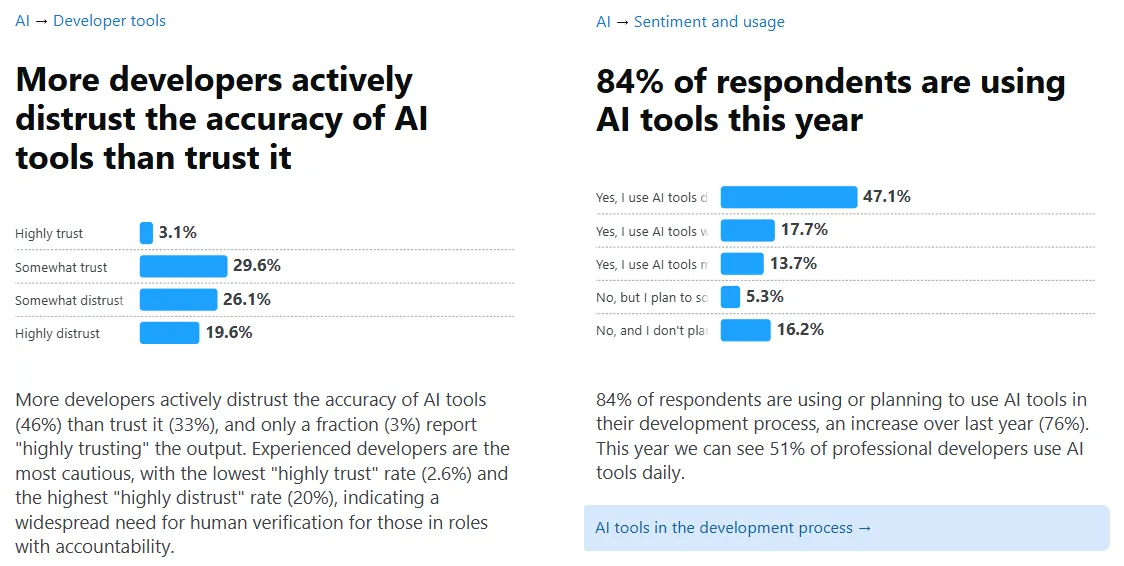

This trust-productivity paradox aligns with findings from Stack Overflow's 2025 survey, where distrust in AI accuracy rose from 31% to 46% in a single year even as adoption reached 84%.

Developers treat AI like a brilliant but unreliable coworker—useful for brainstorming and grunt work, but everything needs double-checking.

DORA: Pushing for AI-Native Jobs

Google's response involves more than just documenting the trend.

On Tuesday, the company unveiled its DORA AI Capabilities Model, a framework that identifies seven practices designed to help organizations harness the value of AI without incurring risks.

The model advocates for user-centric design, clear communication protocols, and what Google refers to as "small-batch workflows": essentially, not letting AI operate without supervision.

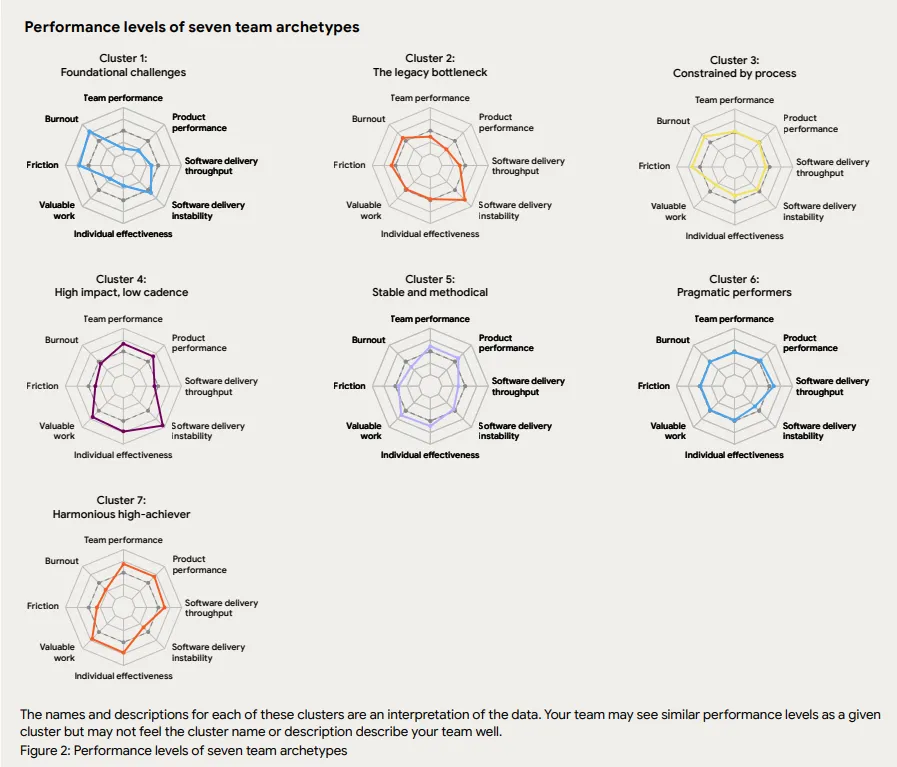

The report also introduces team archetypes ranging from "Harmonious high-achievers" to groups stuck in a "Legacy bottleneck."

These profiles emerged from an analysis of how different organizations handle AI integration. Teams with strong existing processes saw AI amplify their strengths. Fragmented organizations watched AI expose every weakness in their workflow.

The full State of AI-assisted Software Development report and the companion DORA AI Capabilities Model documentation are available through Google Cloud's research portal.

The materials include prescriptive guidance for teams looking to be more proactive in their adoption of AI technologies—assuming anyone trusts them enough to implement them.