Why Simply Plugging OpenAI into DeFi Falls Short of Revolutionizing Finance

AI meets DeFi—sounds like a match made in crypto heaven? Think again.

While the hype train chugs along, slapping OpenAI onto decentralized finance protocols isn’t the magic bullet everyone’s betting on. Here’s why.

The Illusion of Synergy

DeFi’s promise—cutting out middlemen—clashes with AI’s opaque decision-making. Smart contracts demand transparency; black-box algorithms? Not so much.

Liquidity ≠ Intelligence

Throwing LLMs at yield farming doesn’t solve DeFi’s core issues: oracle vulnerabilities, MEV, or that pesky human habit of rug-pulling. AI can’t audit code—yet.

VCs Love Buzzwords, Users Need Utility

Another case of ‘narrative-driven development’—because nothing pumps a token like an AI whitepaper. Meanwhile, real yields still trail TradFi. Oops.

The future? Maybe. The present? Just another overengineered way to lose money faster.

Beyond OpenAI Plugins: Why On-Chain Intelligence Matters

Most crypto AI projects today market themselves as “OpenAI + DeFi” integrations by connecting external models to smart contracts. But Ram Kumar told Cryptonews that this barely scratches the surface:

Most ‘AI + DeFi’ projects stop at connecting external models to smart contracts… Without verifiable data attribution, transparent model governance, and on-chain coordination of model evolution, these integrations are little more than interface layers.

He points out that even powerful models such as OpenAI’s depend entirely on their training data, yet data contributors are rarely recognized or incentivized:

Attribution allows us to measure the influence of each dataset on model behavior, creating accountability and fairness across the entire AI pipeline.

These critiques cut to the core of crypto AI hype. Simply plugging an OpenAI model into a smart contract doesn’t decentralize intelligence; it keeps systems reliant on opaque, off-chain processes. True on-chain AI requires data attribution, governance mechanisms, and agent coordination built directly into blockchain infrastructure. This vision shifts data from a passive resource to an active, rewarded asset class.
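Kumar does not say how attribution is computed. One simple, well-known way to make “the influence of each dataset” concrete is leave-one-out ablation: retrain the model without a dataset and measure how much worse it gets. The Python sketch below assumes a toy one-variable linear model and made-up datasets (`clean_a`, `clean_b`, `noisy`). All names are hypothetical, and real attribution systems use far more scalable estimators. The hypothetical `reward_shares` helper shows how positive influence could be turned into a proportional reward split for contributors.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]   # one (x, y) training or validation example
Model = Tuple[float, float]   # (slope, intercept) of y = a*x + b


def fit_line(points: List[Point]) -> Model:
    """Ordinary least-squares fit of y = a*x + b (toy stand-in for 'training')."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = cov_xy / var_x if var_x else 0.0
    return a, mean_y - a * mean_x


def mse(model: Model, points: List[Point]) -> float:
    """Mean squared error of the model on a set of points."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in points) / len(points)


def leave_one_out_influence(datasets: Dict[str, List[Point]],
                            validation: List[Point]) -> Dict[str, float]:
    """Influence = validation loss without a dataset minus loss with all of them.
    Positive values mean the dataset made the model better."""
    all_points = [p for pts in datasets.values() for p in pts]
    base_loss = mse(fit_line(all_points), validation)
    influence = {}
    for name in datasets:
        rest = [p for other, pts in datasets.items() if other != name for p in pts]
        influence[name] = mse(fit_line(rest), validation) - base_loss
    return influence


def reward_shares(influence: Dict[str, float]) -> Dict[str, float]:
    """Hypothetical payout rule: harmful data earns nothing, the rest split pro rata."""
    positive = {k: max(v, 0.0) for k, v in influence.items()}
    total = sum(positive.values())
    return {k: (v / total if total else 0.0) for k, v in positive.items()}


def line(xs) -> List[Point]:
    """Points lying exactly on the 'true' relationship y = 2x + 1."""
    return [(float(x), 2.0 * x + 1.0) for x in xs]


# Hypothetical contributor datasets and a held-out validation set.
datasets = {
    "clean_a": line(range(0, 5)),
    "clean_b": line(range(5, 10)),
    "noisy": [(2.0, 20.0), (3.0, -10.0), (7.0, 40.0)],
}
validation = line([0.5, 2.5, 4.5, 6.5, 8.5])

influence = leave_one_out_influence(datasets, validation)
shares = reward_shares(influence)
for name, value in influence.items():
    print(f"{name}: influence={value:.3f}, share={shares[name]:.2%}")
```

Running it, the `noisy` dataset gets a negative influence (the model does better without it) and therefore a zero reward share, while the two clean datasets split the rewards. Leave-one-out is easy to reason about but needs one retraining per contributor, so it only suits small examples like this one.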

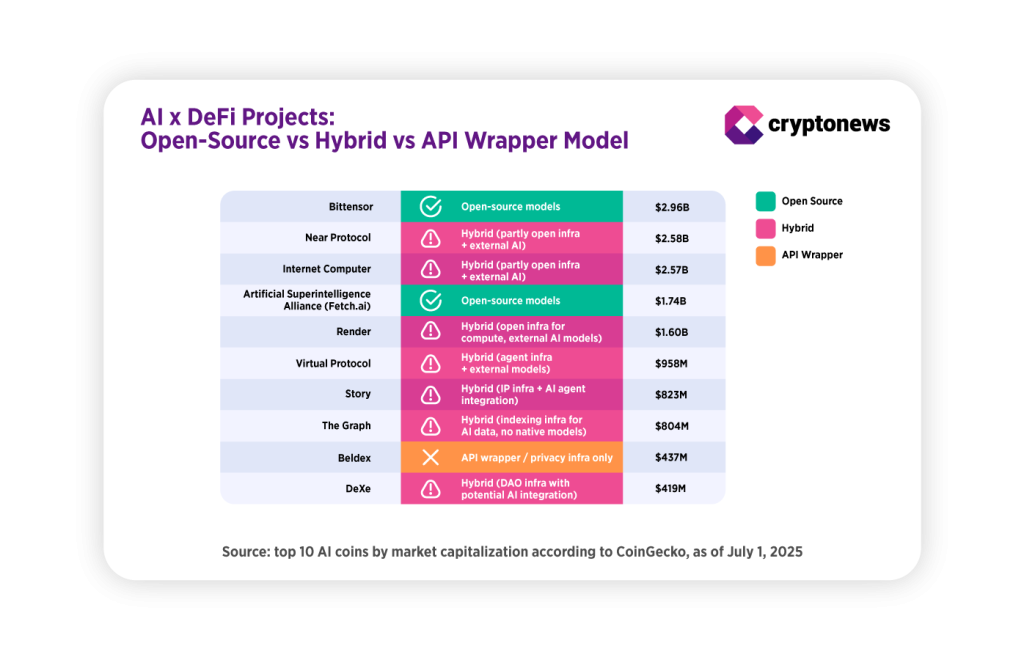

Our data on model types, based on CoinGecko and project whitepapers, shows that fully open-source AI remains rare even among leading projects. Most use hybrid structures that integrate external models such as OpenAI’s while keeping critical components off-chain.

From OpenAI Plugins to Active Agents

AI agents aren’t just about automating tasks anymore. Kumar envisions them as active DAO participants:

AI agents can transition from passive automation tools to active participants… proposing ideas, evaluating decisions, and negotiating outcomes.

However, he warns that their actions must be fully auditable and backed by transparent datasets to maintain accountability.

Verifiability will also be critical for cross-protocol integration. He added: “It allows these agents to operate with clear provenance, where their outputs can be traced back to the data and logic that informed them.”
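To make “traced back to the data and logic that informed them” concrete, here is a minimal Python sketch of a provenance record. It assumes that each input dataset and the agent’s logic (model version, prompt or code) can be fingerprinted with SHA-256, and that only the record’s digest is anchored on-chain. The `ProvenanceRecord`, `make_record` and `verify` names, and the example inputs, are hypothetical and not part of any protocol Kumar describes.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Sequence, Tuple


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 fingerprint of raw bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    dataset_hashes: Tuple[str, ...]  # fingerprints of every input dataset, in order
    logic_hash: str                  # fingerprint of the model/prompt/code version
    output: str                      # the agent's proposal or decision

    def digest(self) -> str:
        """Deterministic digest of the whole record (sorted-key JSON)."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return sha256_hex(payload)


def make_record(datasets: Sequence[bytes], logic: bytes, output: str) -> ProvenanceRecord:
    """Bind an agent output to fingerprints of the data and logic behind it."""
    return ProvenanceRecord(
        dataset_hashes=tuple(sha256_hex(d) for d in datasets),
        logic_hash=sha256_hex(logic),
        output=output,
    )


def verify(record: ProvenanceRecord, anchored_digest: str,
           datasets: Sequence[bytes], logic: bytes) -> bool:
    """True only if the record is unaltered and matches the claimed data and logic."""
    if record.digest() != anchored_digest:
        return False
    return make_record(datasets, logic, record.output) == record


# Hypothetical inputs for a DAO-proposing agent.
inputs = [b"ETH/USDC price snapshot", b"governance forum discussion"]
logic = b"hypothetical-agent-v1 system prompt"
record = make_record(inputs, logic, "Propose lowering the stability fee by 0.5%")
anchored = record.digest()  # in this sketch, the value that would be posted on-chain

print(verify(record, anchored, inputs, logic))         # True
print(verify(record, anchored, [b"tampered"], logic))  # False
```

Anyone holding the original inputs can recompute the fingerprints and check them against the anchored digest; a changed dataset or prompt breaks the match. Note that hashing only proves what the agent was given, not that its reasoning was sound.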

If AI agents start proposing or negotiating DAO decisions, transparency becomes essential. Without it, DAOs risk introducing opaque decision-making that contradicts decentralization. In crypto’s trust-minimized environment, agent outputs must remain traceable to avoid black-box risks within financial or governance protocols.

What Could Go Wrong

Kumar expects deeper adoption to eventually reach infrastructure-level applications:

Deeper adoption will extend into infrastructure-level use cases, such as validators optimizing resource allocation, protocols using AI for governance execution, and decentralized training systems coordinating directly on-chain.

Still, he warns that opaque models making unaccountable decisions pose the biggest risk:

Without proper attribution, economic value can concentrate unfairly while contributors remain invisible.

Flawed AI outputs could trigger unexpected financial losses in DeFi or trading. Regulators may scrutinize AI systems that can’t prove how decisions are made or where data comes from. Reputationally, projects lacking contributor recognition or transparent governance risk eroding trust in decentralization itself.

Token utility data shows that while AI project market caps remain high, many tokens are limited to governance or payment roles instead of powering decentralized AI models and compute.

While AI tokens are surging, Kumar questions their real function:

Tokens only make sense when they serve a fundamental role in coordinating decentralized systems… If a token exists solely for speculative value or gated access, it does little to advance decentralized AI.

Investors may need to ask whether an AI token does more than provide pay-to-use access. Sustainable decentralized AI will require incentives for data contributors, compute providers, and model governance to align within one cohesive ecosystem.

Opportunities: Where Crypto AI Shows Real Utility

Crypto AI agents are already showing promise in areas like DeFi automation, DAO proposal analysis, on-chain research, and cybersecurity. Kumar highlights early examples:

Morpheus is building Solidity models for developing smart contracts and dApps. Ambiosis is developing environmental intelligence agents using verified climate data. We are also collaborating with teams working on Web3 intelligence and cybersecurity agents, all anchored to verifiable data attribution.

Transparency is the common thread. Agents handling funds or governance decisions must remain auditable to avoid systemic risks. According to Kumar, early adoption will come from wallet bots and trading assistants, while protocol-level integrations will take longer because of technical and regulatory hurdles:

Initial adoption will likely emerge from user-facing tools where immediate value is easy to demonstrate, such as trading bots, research assistants, and wallet agents.