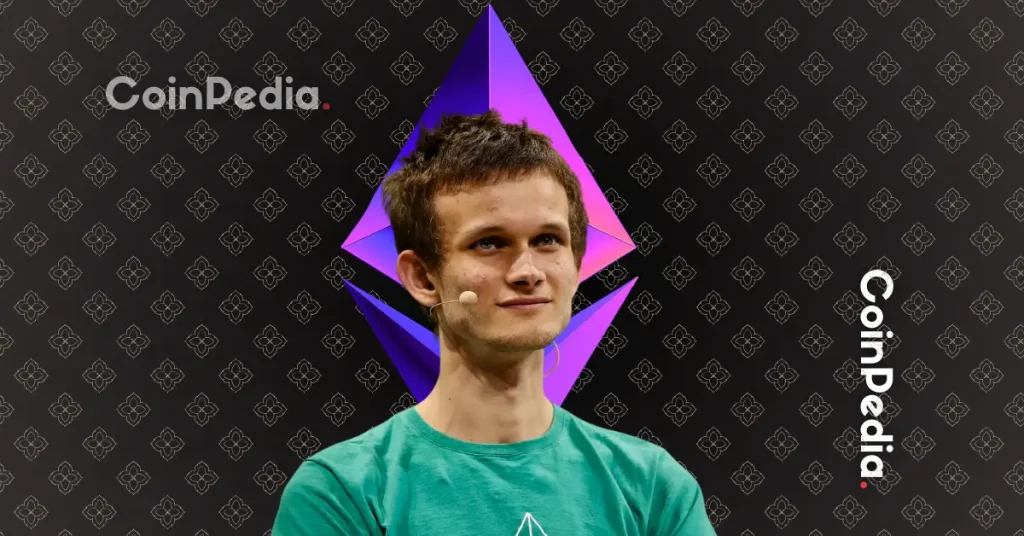

Vitalik Demands Smarter AI Oversight: Human Checks and Market Mechanisms Now

Ethereum co-founder drops blockchain wisdom bomb—AI needs guardrails, not just hype.

Human-in-the-loop meets market incentives

Vitalik Buterin just flipped the script on artificial intelligence governance. Instead of pure algorithmic control, he's pushing for hybrid oversight models that blend human judgment with economic incentives. Think decentralized oracle networks meeting AI validation, with real skin in the game.

The crypto visionary argues pure AI systems risk creating 'black box' failures that could crater markets faster than a leveraged Bitcoin miner during a power outage. His solution? Layer human verification with crypto-economic stakes—where validators put capital on the line to ensure AI outputs stay truthful.

Market-based checks would let participants bet against shady AI behavior, creating financial incentives to keep systems honest. It's like prediction markets meeting AI auditing—with actual monetary consequences for bad actors.
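To make the "bet against shady AI behavior" idea concrete, here is a minimal Python sketch of how such a wager might settle. It is an illustration under stated assumptions, not Buterin's specification: the `Position` type, the `settle` function, and the pro rata payout rule are all hypothetical, and the verdict on whether the output was honest is assumed to come from some outside human review.

```python
from dataclasses import dataclass


@dataclass
class Position:
    """One participant's stake on whether an AI output was honest (hypothetical type)."""
    party: str
    stake: float
    claims_output_is_honest: bool


def settle(positions, output_was_honest):
    """Split the losing side's pooled stake among winners, pro rata to their stake.

    Assumes each party appears once and that the verdict
    (`output_was_honest`) was reached by an external human review.
    """
    winners = [p for p in positions if p.claims_output_is_honest == output_was_honest]
    losers = [p for p in positions if p.claims_output_is_honest != output_was_honest]
    losing_pool = sum(p.stake for p in losers)
    winning_total = sum(p.stake for p in winners)

    payouts = {p.party: 0.0 for p in losers}  # losers forfeit their stake
    for p in winners:
        payouts[p.party] = p.stake + losing_pool * p.stake / winning_total
    return payouts


# A validator vouches for an output; two challengers bet against it.
positions = [
    Position("validator", 100.0, True),
    Position("challenger_a", 30.0, False),
    Position("challenger_b", 10.0, False),
]
print(settle(positions, output_was_honest=False))
```

In this toy version, a validator who vouches for a bad output loses the full stake to whoever challenged it, which is the "monetary consequences" part of the pitch. A real design would also need fees, dispute windows, and a rule for the case where nobody takes the other side.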

Because let's face it—when has pure self-regulation ever worked in finance? Remember 2008? Exactly.

Buterin's framework could prevent AI catastrophes that make FTX look like a minor liquidity event. The proposal lands as AI increasingly handles everything from trading algorithms to loan approvals—with about as much oversight as a Cayman Islands crypto fund.

Time to get smarter about smart machines—before they get smarter about exploiting us.

Ethereum co-founder Vitalik Buterin warned that naive AI governance is vulnerable to exploits such as jailbreak prompts used to divert funds. He favors an "info finance" model instead: an open market in which multiple AI models compete, backed by human spot checks and jury reviews. Model diversity means no single compromised model controls the outcome, and the open market gives participants a reason to surface problems quickly. Pairing AI automation with human oversight in this way is meant to limit manipulation and protect decentralized governance systems.
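The spot-check-plus-jury idea can be sketched in a few lines of Python. This is a rough illustration only: the `spot_check` function, its parameters, and the toy `toy_jury` reviewer are assumptions made for the example, not part of any published design. Each decision from a competing model is sampled at random, and a sampled decision is flagged when a majority of human jurors rejects it.

```python
import random
from collections import Counter


def spot_check(decisions, jury_review, sample_rate, jurors=5, rng=None):
    """Randomly sample AI decisions and flag those a jury majority rejects.

    `jury_review(decision, juror_id)` stands in for a human juror and must
    return True (approve) or False (reject). All names are hypothetical.
    """
    rng = rng or random.Random()
    flagged = []
    for decision in decisions:
        if rng.random() >= sample_rate:
            continue  # not selected for review this round
        votes = Counter(jury_review(decision, juror) for juror in range(jurors))
        if votes[False] > votes[True]:
            flagged.append(decision)
    return flagged


def toy_jury(decision, juror_id):
    """Toy juror: rejects any decision that tries to drain the treasury."""
    return "send all funds" not in decision["action"]


decisions = [
    {"model": "model_a", "action": "fund grant #12"},
    {"model": "model_b", "action": "send all funds to attacker"},
]
# sample_rate=1.0 reviews every decision; a real system would sample far fewer.
print(spot_check(decisions, toy_jury, sample_rate=1.0))
```

The sampling rate is the main knob: a low rate keeps human workload small, and the risk of being sampled is what deters a jailbroken model from slipping a bad decision through.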