AI Agents Unlock $4.6M in On-Chain Exploits: Is Your Blockchain Next?

The bots are in the vault.

Forget hoodie-clad hackers in dark rooms—the latest threat to your digital assets doesn't need caffeine or a moral compass. Autonomous AI agents are now prowling blockchains, and they just proved they can find and exploit weaknesses at machine speed. The result? A cool $4.6 million siphoned from on-chain protocols, serving as a brutal wake-up call for the entire decentralized ecosystem.

The New Attack Surface: Code vs. Machine Learning

Traditional smart contract audits look for bugs in the code. These new agents operate differently. They don't just read the rules; they learn to bend them. By simulating millions of transaction permutations in seconds, they can uncover financial logic flaws and liquidity quirks that human auditors might miss—or that developers never imagined. It's a silent, scalable siege on the very foundations of programmable money.

The $4.6 Million Proof-of-Concept

This wasn't a theoretical exercise. The figure—$4.6 million—is the tangible, extracted value from real protocols. It demonstrates a shift from opportunistic smash-and-grabs to systematic, algorithmic harvesting. Each exploit acts as a training data point, making the next attack smarter and more efficient. The security arms race just entered a new, automated phase where the opponent never sleeps and learns from every failure.

Fortifying the Digital Frontier

So, what's the defense? The answer lies in fighting AI with AI. Developers are now racing to deploy 'defender' agents that can perform continuous, adversarial simulation on live contracts, identifying vulnerabilities before the black-hat bots do. It means layering dynamic, AI-powered monitoring atop static audits. Think of it as an immune system for your protocol, constantly evolving to recognize new threats.

This evolution is painful but necessary. It pushes the industry toward resilience by default. The promise of decentralized finance isn't crumbling; it's being stress-tested by its own technological offspring. The irony, of course, is that the very automation touted to make finance more efficient is now making crime more efficient too—a fitting, if cynical, nod to the immutable law of financial markets: where there's a yield, someone (or something) will find a way to take it.

The genie is out of the bottle. The question is no longer if AI will reshape crypto security, but how fast the ecosystem can adapt to an opponent that rewrites its own playbook.

Source: Anthropic Website

The study used a benchmark called SCONE-bench, which measures the dollar value of on-chain hacks rather than just success or failure. By testing 405 previously exploited contracts, researchers were able to estimate the real financial damage that AI-driven attacks could cause.
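
To illustrate why scoring by dollar value differs from scoring by success or failure, here is a minimal sketch. It is not SCONE-bench's actual code; the `ExploitResult` type, the contract labels, and the dollar figures are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class ExploitResult:
    contract: str      # hypothetical contract label
    succeeded: bool    # did the agent produce a working exploit?
    value_usd: float   # simulated funds extracted, in US dollars


def success_rate(results):
    """Fraction of contracts exploited (the pass/fail view)."""
    return sum(r.succeeded for r in results) / len(results)


def total_value_usd(results):
    """Total simulated dollars extracted (the dollar-value view)."""
    return sum(r.value_usd for r in results if r.succeeded)


# Made-up results: three of four exploits succeed, but one dominates the value.
results = [
    ExploitResult("contract_a", True, 1_200.0),
    ExploitResult("contract_b", True, 950_000.0),
    ExploitResult("contract_c", False, 0.0),
    ExploitResult("contract_d", True, 300.0),
]

print(f"success rate: {success_rate(results):.0%}")
print(f"total value:  ${total_value_usd(results):,.0f}")
```

In this toy data the success rate treats all three working exploits alike, while the dollar total shows that nearly all of the damage comes from a single contract.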

AI Finds New Bugs Automatically

The models didn’t just study old hacks; they also checked 2,849 newly deployed contracts that had no known issues. They discovered two new zero-day vulnerabilities and generated exploits worth $3,694. GPT-5 did this at low API cost, showing that AI can now profit from attacks automatically.

The flaws involved unprotected read-only functions that allowed token inflation and missing fee checks in withdrawals.

These are the kinds of problems that can be exploited for real money, highlighting the need for constant contract audits.
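
As a rough picture of what a missing fee check in a withdrawal can look like, consider the toy model below. It is a hypothetical Python stand-in, not the contracts from the study: `ToyVault`, the 0.5% fee, and both withdrawal methods are assumptions made for illustration.

```python
class ToyVault:
    """Hypothetical vault, invented for illustration only."""

    FEE_BPS = 50  # assumed 0.5% withdrawal fee, in basis points

    def __init__(self):
        self.balances = {}
        self.fees_collected = 0

    def deposit(self, user, amount):
        self.balances[user] = self.balances.get(user, 0) + amount

    def _check(self, user, amount):
        if amount <= 0 or self.balances.get(user, 0) < amount:
            raise ValueError("invalid withdrawal")

    def withdraw_buggy(self, user, amount):
        # Bug: the fee is never charged, so the user is paid in full.
        self._check(user, amount)
        self.balances[user] -= amount
        return amount

    def withdraw_fixed(self, user, amount):
        # Fix: compute the fee and deduct it from the payout.
        self._check(user, amount)
        fee = amount * self.FEE_BPS // 10_000
        self.balances[user] -= amount
        self.fees_collected += fee
        return amount - fee


vault = ToyVault()
vault.deposit("alice", 10_000)
paid_buggy = vault.withdraw_buggy("alice", 10_000)   # fee skipped
vault.deposit("alice", 10_000)
paid_fixed = vault.withdraw_fixed("alice", 10_000)   # fee applied
print(paid_buggy, paid_fixed, vault.fees_collected)
```

The difference per withdrawal is small, which is part of why this kind of flaw is easy to overlook and cheap for an automated agent to harvest repeatedly.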

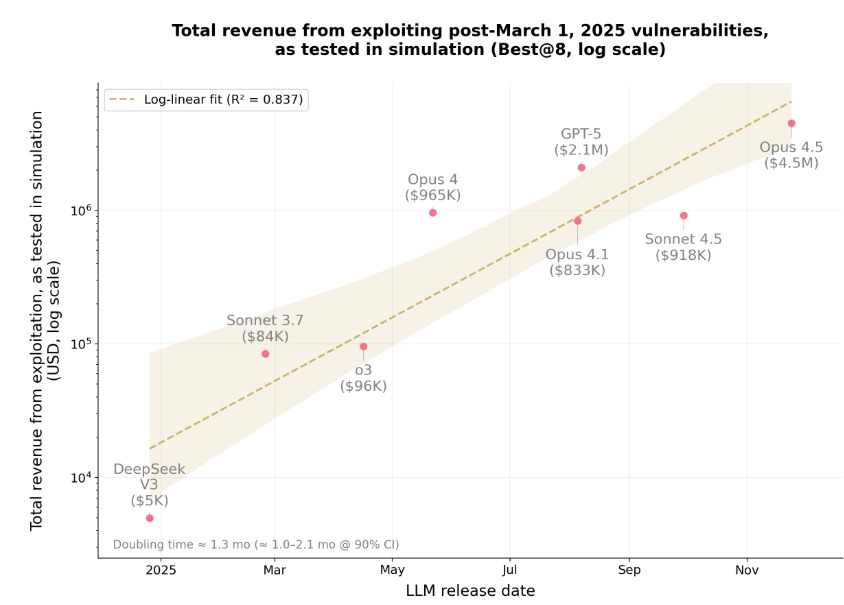

Autonomous Exploits Are Growing Fast

The research shows that AI-driven exploits are growing quickly. Revenue from these attacks doubled roughly every 1.3 months. AI models can now reason over long sequences of steps, use tools automatically, and fix their own mistakes. In just one year, the technology went from exploiting 2% of new vulnerabilities to over 55%. This suggests that most recent blockchain exploits could now be carried out without human help.
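
To put the 1.3-month doubling time in perspective, the short calculation below converts it into a growth factor. It assumes the doubling rate stays constant, which is an extrapolation for illustration and not a claim made by the research.

```python
import math

DOUBLING_TIME_MONTHS = 1.3  # figure quoted above


def growth_factor(months, doubling_time=DOUBLING_TIME_MONTHS):
    """How many times larger revenue becomes after `months` of steady doubling."""
    return 2 ** (months / doubling_time)


def months_to_grow(factor, doubling_time=DOUBLING_TIME_MONTHS):
    """Months of steady doubling needed to grow by `factor`."""
    return doubling_time * math.log2(factor)


for m in (1.3, 6, 12):
    print(f"{m:>4} months -> x{growth_factor(m):,.1f}")

print(f"months to reach 100x: {months_to_grow(100):.1f}")
```

Under that assumption, a year of steady doubling compounds to a growth factor of several hundred, which is why even a small starting figure is worth taking seriously.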

This rapid rise is a warning: blockchain security teams must start using AI themselves to defend smart contracts. Autonomous AI is no longer just theoretical; it can hack and make money from vulnerabilities automatically.

Lessons From the Yearn Finance yETH Hack

A real-world example shows how serious these exploits can be. On November 30, 2025, Yearn Finance's yETH vault was drained of around $9 million.

The attacker did not directly hack the smart contract but managed to manipulate the vault's pricing and accounting system.

The attack combined flash loans and inflated tokens to withdraw more ETH than was deposited.

Part of the stolen ETH was then laundered through Tornado Cash.

This incident shows that smart contract security is not just about checking code. Pricing systems, token interactions, and liquidity pools all need continuous testing to prevent attacks.
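
The general idea of withdrawing more than was deposited through manipulated accounting can be sketched with a toy share-based vault. This is not a reconstruction of the yETH exploit; `ToyShareVault`, its `inflate` step, and all numbers are hypothetical and only show how a mispriced share can drain real funds.

```python
class ToyShareVault:
    """Hypothetical vault that prices shares from a reported asset value."""

    def __init__(self, eth, shares):
        self.real_eth = eth          # ETH the vault actually holds
        self.reported_assets = eth   # value the pricing logic believes it holds
        self.total_shares = shares

    def deposit(self, eth):
        minted = eth * self.total_shares // self.reported_assets
        self.real_eth += eth
        self.reported_assets += eth
        self.total_shares += minted
        return minted

    def inflate(self, phantom_value):
        # Stand-in for the manipulation step: reported value rises, real ETH does not.
        self.reported_assets += phantom_value

    def redeem(self, shares):
        payout = shares * self.reported_assets // self.total_shares
        payout = min(payout, self.real_eth)  # cannot pay out more than is held
        self.real_eth -= payout
        self.reported_assets -= payout
        self.total_shares -= shares
        return payout


vault = ToyShareVault(eth=100, shares=100)
deposited = 10                       # could be funded by a flash loan
minted = vault.deposit(deposited)    # shares bought at the fair price
vault.inflate(110)                   # accounting now overstates the assets
payout = vault.redeem(minted)        # shares redeemed at the inflated price
print(f"deposited {deposited} ETH, withdrew {payout} ETH")
```

In this sketch the code does exactly what it was written to do; the loss comes from the gap between reported and real assets, which is the kind of issue a code-only review can miss.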

Why This Matters

AI agents can now automate the exploitation of on-chain vulnerabilities. While this is a grave threat, the same technology can help defenders find bugs and secure contracts. AI-assisted auditing, monitoring, and testing of smart contracts are becoming imperative.

Blockchain projects now have to move very fast. Stronger audits, better contract design, and AI-based defenses will be needed to protect funds in this new phase of autonomous on-chain exploits.